Running a VMware vSphere Metro Storage Cluster (VMware vMSC) makes it possible to move VMs between two geographical locations without downtime. In a Metro Storage Cluster, a vSphere cluster consists of ESXi hosts from both locations with shared storage that is stretched across these locations. Using a VMware Metro Storage Cluster makes your environment even more resilient against failures and can help you get up and running again much faster should a disaster occur.

To get more information on VMware Metro Storage Cluster read the following articles:

Implementing vSphere Metro Storage Cluster (vMSC) using HP 3PAR Peer Persistence (2055904)

Implementing vSphere Metro Storage Cluster (vMSC) using EMC VPLEX (2007545)

VMware vSphere Metro Storage Cluster Case Study

(In this post I used an example and image from the VMware vSphere Metro Storage Cluster Case Study)

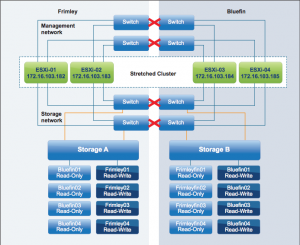

While a Metro Storage Cluster offers resilience in many failure scenarios, a datacenter partition can still cause downtime for your VMs. This is because a stretched VMFS volume is in reality a virtual VMFS volume based on a read-write volume in one datacenter and a read-only volume in the other datacenter. When a datacenter partition occurs, the virtual VMFS volume will only be accessible to the ESXi hosts on the read-write site. Such a partition could therefore bring down VMs that are running “cross datacenter”, that is, on a host in one datacenter while their datastore is read-write in the other.

(Image taken from the White Paper “VMware vSphere Metro Storage Cluster Case Study”).

For example, looking at the image above: a VM is running on ESXi-01 and is using datastore Bluefin01. Usually this is fine, as the I/Os from the VM are handled by storage A and the storage metro clustering software (for example EMC VPLEX) makes sure each I/O is also written to storage B. When the datacenters become partitioned, datastore Bluefin01 becomes invisible on ESXi-01 / storage A and is only visible and writable on storage B, which causes the VM to lose the connection to its disks.

To prevent these scenarios, DRS host-to-VM affinity rules are usually used. VMs that reside on the Bluefin datastores are tied to ESXi-03 and ESXi-04 through a host-affinity rule. In theory this should be enough, but in practice we noticed that now and then admins would forget to add new VMs to those DRS rules, or would Storage vMotion a VM from a Bluefin VMFS to a Frimley VMFS without adjusting the DRS rules.
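
For reference, a host-to-VM affinity rule like the one described above could be created with PowerCLI roughly as follows. This is only a sketch, not the setup we actually used: the cluster name `MetroCluster`, the group and rule names, the `Bluefin*` datastore pattern and the choice of a "should" rule are all assumptions, and it needs a PowerCLI version that includes the `New-DrsClusterGroup` and `New-DrsVMHostRule` cmdlets.

```powershell
# Sketch only: all names below are assumptions, adjust them to your environment.
# Requires VMware PowerCLI and an active Connect-VIServer session.
$cluster = Get-Cluster -Name "MetroCluster"    # hypothetical cluster name

# Hosts on the Bluefin site (ESXi-03 and ESXi-04 in the example above)
$bluefinHosts = Get-VMHost -Name "ESXi-03*", "ESXi-04*" -Location $cluster

# VMs that have disks on a Bluefin datastore
$bluefinVMs = Get-VM -Datastore (Get-Datastore -Name "Bluefin*")

# One DRS group for the hosts, one for the VMs
$hostGroup = New-DrsClusterGroup -Name "Hosts-Bluefin" -Cluster $cluster -VMHost $bluefinHosts
$vmGroup   = New-DrsClusterGroup -Name "VMs-Bluefin" -Cluster $cluster -VM $bluefinVMs

# Tie the VM group to the host group (ShouldRunOn is an assumption, MustRunOn also exists)
New-DrsVMHostRule -Name "VMs-Bluefin-on-Hosts-Bluefin" -Cluster $cluster -VMGroup $vmGroup -VMHostGroup $hostGroup -Type ShouldRunOn
```

A rule like this only covers the VMs that were in the group when it was created, which is exactly why new or moved VMs can slip through.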

To keep an eye on changes like these and prevent unwanted downtime, my colleague Peter Lammers and I used vCenter tags and PowerShell to make sure a VM is always on the correct datastore and host. Each morning a PowerShell script runs that checks whether the VM location tag, the datastore location tag and the host location tag match.

VM location tags, datastore location tags and host location tags

For the location tags to work, fire up the good old vSphere Web Client (hehehe). Go to the “Tags” section, click on “Categories” and create a new category named “Location” with a cardinality of “One tag per object”, and under “Associable Object Types” select Datastore, Host and Virtual Machine. Next click on “Tags” and create two tags in the Location category, in this example “Bluefin” and “Frimley”.

Next, in the vSphere Web Client, select the “Frimley” datastores and add the “Frimley” tag. For the “Bluefin” datastores add the “Bluefin” tag. Do the same for the hosts and the VMs.
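
If you prefer not to click through the Web Client, the same category, tags and assignments can probably be created with PowerCLI as well. A minimal sketch, assuming the datastore names start with the site name (`Bluefin*`, `Frimley*`) and that ESXi-03 and ESXi-04 are the Bluefin hosts as in the example above; we did this part by hand, so treat it as an illustration only.

```powershell
# Sketch only: requires VMware PowerCLI and an active Connect-VIServer session.
# Create the "Location" category: one tag per object, for datastores, hosts and VMs
New-TagCategory -Name "Location" -Cardinality Single -EntityType "Datastore", "VMHost", "VirtualMachine"

# Create the two site tags in that category
New-Tag -Name "Bluefin" -Category "Location"
New-Tag -Name "Frimley" -Category "Location"

# Tag the datastores by name pattern (the patterns are assumptions)
ForEach( $site in "Bluefin", "Frimley" )
{
    $tag = Get-Tag -Name $site -Category "Location"
    Get-Datastore -Name "$site*" | New-TagAssignment -Tag $tag
}

# Tag the Bluefin hosts; repeat for the Frimley hosts and for the VMs
$bluefinTag = Get-Tag -Name "Bluefin" -Category "Location"
Get-VMHost -Name "ESXi-03*", "ESXi-04*" | New-TagAssignment -Tag $bluefinTag
```

Because the category allows only one tag per object, an object cannot end up tagged with both sites.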

When you’re done, you can use this script to make sure that each VM, its datastores and its host are in the same location.

```powershell
# Requires VMware PowerCLI and an active Connect-VIServer session
$Report = @()

# Get all VMs
$VMList = Get-VM

ForEach( $vm in $VMList )
{
    # 1 Get the VM location tag
    $VMLocation = Get-TagAssignment -Entity $vm -Category "Location"

    # Get the location of the host (VMHost replaces the obsolete Host property)
    $HostLocation = $vm.VMHost | Get-TagAssignment -Category "Location"

    # 2 What datastores is the VM running on?
    $datastores = Get-Datastore -RelatedObject $vm

    ForEach( $datastore in $datastores )
    {
        # Get the location of the datastore
        $DSLocation = $datastore | Get-TagAssignment -Category "Location"

        # One report row per VM / datastore combination
        $row = "" | Select-Object Name, Location, HostName, HostLocation, DSName, DSLocation, ResultDS, ResultHost
        $row.Name = $vm.Name
        $row.Location = $VMLocation.Tag
        $row.HostName = $vm.VMHost.Name
        $row.HostLocation = $HostLocation.Tag
        $row.DSName = $datastore.Name
        $row.DSLocation = $DSLocation.Tag

        # Compare the tag names of the VM and the datastore
        if( $VMLocation.Tag.Name -ne $DSLocation.Tag.Name )
        {
            Write-Host $vm.Name $VMLocation.Tag $datastore.Name $DSLocation.Tag "Incorrect storage assignment"
            $row.ResultDS = "Incorrect storage assignment"
        }else{
            $row.ResultDS = "OK"
        }

        # Compare the tag names of the VM and the host
        if( $VMLocation.Tag.Name -ne $HostLocation.Tag.Name )
        {
            Write-Host $vm.Name $VMLocation.Tag $vm.VMHost.Name $HostLocation.Tag "Incorrect host assignment"
            $row.ResultHost = "Incorrect host assignment"
        }else{
            $row.ResultHost = "OK"
        }

        $Report += $row
    }
}

$Report
```

Interesting idea Gabe

So how do you make sure the new VM is tagged correctly? How do you make sure your administrators don’t forget to tag the VM location during creation?

Have great memories working on this paper. :)

Hi, thanks!

Maybe I should have mentioned this, but VMs are deployed automatically through a script with a set of default settings inside the VM, like SNMP, anti-virus, etc, but also a set of attributes and tags at VM level.

Gabrie

Hi, very stupid question, but when I run the above I receive: The term ‘<’ is not recognized as the name of a cmdlet. If I comment out the line, the script doesn’t do anything. Any ideas?

You’re right. There was something wrong in my script / WordPress formatting. I corrected it, can you test it again?

To achieve more or less the same thing plus automated fixing, we created a PowerShell script that checks on which datastore a VM has its disks. It derives the site preference from the datastore name and then makes sure the VM is in the correct DRS affinity group.

The idea here is that we ‘balance’ the metro cluster based on the storage location. The storage location of the VM is always leading. So once we Storage vMotion a VM, the DRS rules will always follow and reflect where the VM lives, and make sure there is alignment.

We run the script every night, and when it does, you see newly created VMs vMotioning to the site where their storage is primary.

Thank you for your comment. Feel free to post the script.

Great article..

Was wondering about an easy check script…

Let’s say we want to verify that a VM tagged with SiteA is in DRS affinity group SiteA; if not, it reports back failed.

Shouldn’t be too hard based on the script that I already provided. You can’t tag a DRS rule, but you could use special naming in DRS rules to match them with a tag.
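
A rough sketch of how such a check could look, following the naming idea above. It assumes the DRS VM groups are named `VMs-<tag name>` (for example `VMs-Bluefin`), which is a made-up convention, and that your PowerCLI version has the `Get-DrsClusterGroup` cmdlet. The function names are just for illustration.

```powershell
# Sketch only: the "VMs-<tag name>" naming convention is an assumption.
function Get-ExpectedVMGroupName
{
    # Builds the DRS VM group name that belongs to a Location tag name
    param( [string]$TagName )
    "VMs-$TagName"
}

function Test-VMDrsGroup
{
    # Returns one row per VM with its Location tag, its DRS VM groups and a result.
    # Requires VMware PowerCLI and an active Connect-VIServer session.
    param( $VMList )

    ForEach( $vm in $VMList )
    {
        $tagName  = (Get-TagAssignment -Entity $vm -Category "Location").Tag.Name
        $groups   = @(Get-DrsClusterGroup -VM $vm -Type VMGroup | Select-Object -ExpandProperty Name)
        $expected = Get-ExpectedVMGroupName -TagName $tagName

        [PSCustomObject]@{
            Name     = $vm.Name
            Location = $tagName
            Groups   = $groups -join ", "
            # Failed when the VM has no tag or is not in the matching group
            Result   = if( $tagName -and $groups -contains $expected ){ "OK" }else{ "Failed" }
        }
    }
}
```

To list only the problem VMs, you could run `Test-VMDrsGroup -VMList (Get-VM) | Where-Object Result -eq "Failed"`.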