Did you know how VMware HA admission control is tied to VM reservations? To be honest, I thought I knew, but recently someone pointed me to some details that showed I was wrong.

What I’m talking about is the cluster’s “HA Admission Control” setting, and how the “Percentage of cluster resources reserved as failover spare capacity” and “Host failures the cluster tolerates” policies relate to CPU and memory reservations at the VM level. These policies make sure your HA cluster reserves enough resources to recover from host failures: the higher you set the percentage of resources to be reserved, the more host failures can be tolerated.

Where did I go wrong? Well, I thought vCenter based its HA spare capacity calculations on real usage, sampled at a 5-minute interval. But I was wrong. These calculations are not based on real-life numbers but on the reservations you set at the VM level. The same goes for the “Host failures the cluster tolerates” setting: the slot size is based on the reservations set per VM.

Ouch, I felt a little embarrassed about being wrong on this, especially since I normally made sure VM reservations were set to zero unless there was a special need for one. But as I started asking people what they used for their VM reservations, I learned that not many were using VM reservations at all, and that more people than I expected had the same wrong idea about this.

So, to be clear once and for all:

The values used in calculations for “Host failures the cluster tolerates” and “Percentage of cluster resources reserved as failover spare capacity” are based on the CPU and memory reservations set at VM level.

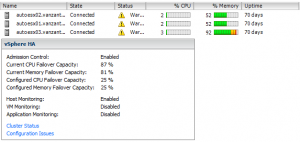
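To make the percentage-based policy concrete, here is a minimal Python sketch of a reservation-based capacity check. It assumes the calculation as described in this post and in Duncan’s guide: sum the CPU reservations and the memory reservations plus overhead of powered-on VMs, use a 32MHz default for VMs without a CPU reservation, and compare what is left with the configured percentage. The `Vm` class, the function names and all numbers are hypothetical; this is not vCenter’s actual code.

```python
from dataclasses import dataclass

DEFAULT_CPU_MHZ = 32  # assumed default for VMs without a CPU reservation (vSphere 5.0+)


@dataclass
class Vm:
    """Hypothetical model of a powered-on VM. Note: no 'actual usage' field at all."""
    name: str
    cpu_reservation_mhz: int
    mem_reservation_mb: int
    mem_overhead_mb: int


def failover_capacity(total_cpu_mhz, total_mem_mb, vms):
    """Return (cpu_pct, mem_pct) of cluster resources not claimed by reservations."""
    cpu_reserved = sum(vm.cpu_reservation_mhz or DEFAULT_CPU_MHZ for vm in vms)
    mem_reserved = sum(vm.mem_reservation_mb + vm.mem_overhead_mb for vm in vms)
    cpu_pct = 100 * (total_cpu_mhz - cpu_reserved) / total_cpu_mhz
    mem_pct = 100 * (total_mem_mb - mem_reserved) / total_mem_mb
    return cpu_pct, mem_pct


def can_power_on(total_cpu_mhz, total_mem_mb, running, candidate, reserved_pct):
    """Admission check: capacity left after the power-on must stay >= the configured %."""
    cpu_pct, mem_pct = failover_capacity(total_cpu_mhz, total_mem_mb, running + [candidate])
    return cpu_pct >= reserved_pct and mem_pct >= reserved_pct


# Hypothetical cluster: 3 hosts with 20000 MHz and 8 GB each, 25% reserved for failover.
running = [Vm("web01", 0, 0, 250), Vm("db01", 0, 4096, 250)]
print(failover_capacity(3 * 20000, 3 * 8192, running))
print(can_power_on(3 * 20000, 3 * 8192, running, Vm("big01", 0, 16384, 500), 25))
```

Nothing in this calculation looks at what the VMs actually consume, which is exactly why a cluster full of zero-reservation VMs can look almost empty to HA.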

And to prove that setting no reservations can overload your cluster, have a look at my lab environment, where I have set NO reservations on any of the VMs. My three hosts have 8GB of RAM each, and their current memory usage is 52%, 52% and 92%, which makes a total of 15.7GB of RAM in use out of the 24GB in my lab. That is 65%. Yet the vSphere HA status box on the cluster’s summary page shows a “Current Memory Failover Capacity” of 81%. Anyone can see that’s not right. If you’re asking why 81% and not a full 100%: those 19% are lost to VM memory overhead. But it should be clear that I can’t power on another 81% of 24GB = 19GB of VMs and still have 25% spare HA failover capacity.
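The lab numbers above, worked out in a few lines of Python. Every input comes from the text; the sketch only does the arithmetic that contrasts HA’s reservation-based view with actual usage.

```python
HOST_RAM_GB = 8
host_usage_pct = [52, 52, 92]   # current memory usage per host, from the lab above
ha_failover_capacity_pct = 81   # “Current Memory Failover Capacity” reported by vCenter

total_gb = HOST_RAM_GB * len(host_usage_pct)
used_gb = sum(pct / 100 * HOST_RAM_GB for pct in host_usage_pct)
used_pct = 100 * used_gb / total_gb

# What HA's reservation-based view suggests is still available...
ha_free_gb = ha_failover_capacity_pct / 100 * total_gb
# ...versus what is actually unused right now.
actually_free_gb = total_gb - used_gb

print(f"in use: {used_gb:.1f} of {total_gb} GB ({used_pct:.0f}%)")
print(f"HA view: {ha_free_gb:.1f} GB free, actual: {actually_free_gb:.1f} GB free")
```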

For details on how the calculations are made, check Duncan’s VMware HA DeepDive Guide, a must-read.

Now I have two questions for you, please respond in the comments:

- The “make me feel a little better” question: Did you know about this?

- What default CPU and RAM reservation do you use in your environment? Of course, special VMs will have different requirements, but as a ‘rule of thumb’, what percentage do you reserve?

Update:

- Frank Denneman responded to my post on his blog: THE ADMISSION CONTROL FAMILY

- Chris Colotti also responded to my post on his blog: Bad Idea: Disabling HA Admission Control With vCloud.

- My colleague Menno De Liege wrote a post on how to easily change the reservation of a VM: HA Admission Control: Base VM reservation on percentage.

Remember that even a VM with a ZERO reservation still has an overhead reservation. For example, a 2 vCPU 4GB VM will still have about 250MB of overhead reservation. So if you do set zero reservations per VM, the overhead is what is used in these calculations. The bigger the VM, the bigger the overhead, so a 32GB monster VM needs roughly 1GB of overhead even with a 0% reservation set. HA will use whatever reservation is there (set or overhead) to manage admission control. If you DO set reservations, I am pretty sure it will use the SET reservation PLUS the overhead, since after an HA failure you need BOTH to power on a VM.
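A tiny sketch of the point made in this comment: per VM, HA counts the configured reservation plus the overhead reservation. The overhead figures (about 250MB for a 2 vCPU 4GB VM, roughly 1GB for a 32GB VM) are the approximations quoted here; real overhead depends on the VM configuration and ESXi version. The 410MB reservation in the third entry is a hypothetical 10% example.

```python
def ha_reservation_mb(reservation_mb: int, overhead_mb: int) -> int:
    """Memory HA must be able to guarantee for a VM: configured reservation PLUS overhead."""
    return reservation_mb + overhead_mb


# (name, configured memory MB, configured reservation MB, approx. overhead MB)
vms = [
    ("2vcpu-4gb", 4096, 0, 250),          # zero reservation: only overhead counts
    ("monster-32gb", 32768, 0, 1024),     # bigger VM, bigger overhead
    ("2vcpu-4gb-10pct", 4096, 410, 250),  # hypothetical ~10% reservation
]

for name, mem_mb, res_mb, ovh_mb in vms:
    print(f"{name} ({mem_mb} MB configured): HA counts {ha_reservation_mb(res_mb, ovh_mb)} MB")

total = sum(ha_reservation_mb(res, ovh) for _, _, res, ovh in vms)
print(f"total counted by HA: {total} MB")
```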

Great post; a lot more people miss this than you think, but don’t forget about overhead. Then put this in the context of vCloud with HA and you have an even more interesting discussion, since vCD potentially sets a lot of reservations in the PAYG and Allocation Pool models.

1) Yes I knew about this, and you should have known as well! Read the book or the many blog posts I have written about it by now :-)

2) HA Admission Control is all about ENSURING your virtual machines can be restarted when a failure has occurred. It is not about guaranteeing the same set of resources.

3) VM memory overhead is reserved, which is why it is subtracted from the total amount of resources. Also, for CPU there is no such thing as overhead; when no CPU reservation is set, a default of 32MHz (5.0 and above) or 256MHz (4.1 and below) is used.
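For the “Host failures the cluster tolerates” policy, the same reservations drive the slot size. A minimal sketch, assuming the slot-size logic as described in Duncan’s guide: the CPU slot is the largest CPU reservation of any powered-on VM (with the 32MHz default mentioned above), the memory slot is the largest memory reservation plus overhead, a host holds as many slots as its scarcer resource allows, and the hosts with the most slots are set aside for failover. Function names and figures are hypothetical, and details such as VMs spanning several slots are ignored.

```python
DEFAULT_CPU_SLOT_MHZ = 32  # vSphere 5.0+; 256 MHz on 4.1 and below


def slot_size(vms):
    """vms: (cpu_reservation_mhz, mem_reservation_mb, mem_overhead_mb) per powered-on VM."""
    cpu = max([c for c, _, _ in vms] + [DEFAULT_CPU_SLOT_MHZ])
    mem = max(res + ovh for _, res, ovh in vms)
    return cpu, mem


def slots_per_host(host_cpu_mhz, host_mem_mb, slot):
    """A host holds as many slots as its scarcer resource allows."""
    cpu_slot, mem_slot = slot
    return min(host_cpu_mhz // cpu_slot, host_mem_mb // mem_slot)


def usable_slots(hosts, failures_to_tolerate, slot):
    """Slots left after setting aside the hosts with the most slots for failover."""
    per_host = sorted((slots_per_host(c, m, slot) for c, m in hosts), reverse=True)
    return sum(per_host[failures_to_tolerate:])


# Hypothetical cluster: 3 identical hosts with 20000 MHz and 48 GB each.
hosts = [(20000, 49152)] * 3
vms = [(0, 0, 250), (0, 0, 1024), (500, 2048, 300)]
slot = slot_size(vms)
print(slot, slots_per_host(20000, 49152, slot), usable_slots(hosts, 1, slot))
```

One VM with a 2GB reservation sets the memory slot for every VM in the cluster, which is why a few large reservations can shrink the number of usable slots so dramatically.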

HA will indeed always use “configured reservation + overhead reservation”. The reason is that a VM can only be powered on when its “reservation” can be guaranteed on the host.

I didn’t know about this before I started teaching vSphere courses.

I still usually recommend that my customers not set individual VM reservations, since it’s (IMHO) too much micromanagement, and hence time-consuming.

Does that mean that in a cluster with no reservations, admission control is almost ineffective, in the sense that it will only stop you from powering on a VM when there aren’t sufficient resources to cover the next VM’s reservation (i.e. when you are already on the verge of disaster)?

Does that mean you should set a reservation (for example 10% for production VMs) just to cover this angle?

Yes, you’re correct. About setting a minimum reservation, I’m still having a discussion with Frank Denneman :-) I hope he’ll write a good blog post on this.

Very important and very interesting topic, then! I must leave now; I have urgent changes to make in production ;-)

There is also something that was valid in vSphere 4.1 (I say that because I have not checked 5.0): the das.slotCpuInMHz and das.slotMemInMB advanced options. These are custom values you can set to cap how big a slot can be, so a few large reservations no longer dictate the slot size. I have found myself using these instead of letting VMware HA take my reservations into account. We’ve been running MSCS clusters in the same cluster as non-clustered machines, and those VMs are 8GB (on 48GB hosts), so the math worked out such that we could not use our full capacity.
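To see why those 8GB VMs hurt, here is a rough memory-only sketch. It assumes, as VMware’s documentation describes these options, that das.slotMemInMB acts as an upper bound: the memory slot becomes the smaller of this value and the largest reservation plus overhead. The 300MB overhead, the full 8GB reservation on the MSCS VMs and the 2048MB cap are hypothetical numbers chosen for illustration. A VM whose reservation is larger than the capped slot still occupies several slots, so the cap changes the accounting rather than making that reservation disappear.

```python
import math

HOST_MEM_MB = 48 * 1024  # 48 GB hosts, as in the comment


def memory_slot_mb(largest_reservation_plus_overhead_mb, slot_mem_cap_mb=None):
    """Memory slot size; the optional cap mimics das.slotMemInMB as an upper bound."""
    if slot_mem_cap_mb is None:
        return largest_reservation_plus_overhead_mb
    return min(largest_reservation_plus_overhead_mb, slot_mem_cap_mb)


# Hypothetical: an 8 GB MSCS VM with its memory fully reserved, plus ~300 MB overhead.
largest = 8 * 1024 + 300

for cap in (None, 2048):
    slot = memory_slot_mb(largest, cap)
    print(f"cap={cap}: slot={slot} MB, {HOST_MEM_MB // slot} slots per host")

# With the cap in place, the MSCS VM itself occupies several slots.
slots_used_by_mscs_vm = math.ceil(largest / 2048)
print(f"MSCS VM occupies {slots_used_by_mscs_vm} capped slots")
```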

In my view the best way would be like this:

1. setup a cluster, setup vms, with or without reservations

2. A few months later, measure your memory and CPU usage (either from vCenter metrics or from your monitoring systems) and set the average values as your slot size. This would probably work best with a medium to high number of hosts/VMs, since the average will be more meaningful than with only a few VMs.
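Step 2 could look roughly like this. The per-VM averages are made-up placeholders for whatever you export from vCenter or your monitoring system; the result is what you would then enter as das.slotCpuInMHz and das.slotMemInMB (which, per VMware’s docs, cap the slot size rather than replace it). Rounding up is a design choice here, so the slot is never smaller than the average.

```python
import math
from statistics import mean

# Hypothetical per-VM averages over a few months (MHz, MB).
measured = {
    "web01": {"cpu_mhz": 350, "mem_mb": 1800},
    "web02": {"cpu_mhz": 410, "mem_mb": 2000},
    "db01": {"cpu_mhz": 1200, "mem_mb": 6000},
    "app01": {"cpu_mhz": 240, "mem_mb": 1400},
}


def average_slot(measured):
    """Average CPU and memory usage across VMs, rounded up to whole MHz/MB."""
    cpu = mean(vm["cpu_mhz"] for vm in measured.values())
    mem = mean(vm["mem_mb"] for vm in measured.values())
    return math.ceil(cpu), math.ceil(mem)


cpu_slot, mem_slot = average_slot(measured)
print(f"das.slotCpuInMHz = {cpu_slot}")
print(f"das.slotMemInMB = {mem_slot}")
```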

By default, we reserve half of the VM memory on production clusters (using a script). If you reserve less than this, you run into all sorts of performance problems when hosts get overcommitted too much and a dormant VM suddenly wakes up and wants to use its memory.
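The default-reservation script mentioned here (and Menno’s percentage-based approach in the update above) boils down to a simple calculation. This is a minimal sketch of that calculation only; the inventory and helper name are hypothetical, and actually applying the values would be done through PowerCLI or the vSphere API, which is not shown.

```python
def reservation_mb(configured_mb: int, pct: int) -> int:
    """Memory reservation as a percentage of configured memory, rounded down to whole MB."""
    return configured_mb * pct // 100


# Hypothetical inventory: VM name -> configured memory in MB.
inventory = {"web01": 4096, "db01": 16384, "app01": 6144}

production = {name: reservation_mb(mb, 50) for name, mb in inventory.items()}  # 50%, as in this comment
ten_pct = {name: reservation_mb(mb, 10) for name, mb in inventory.items()}     # 10%, as asked earlier
print(production)
print(ten_pct)
```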

Excellent! I’ve wondered for months why my “Current CPU Failover” was at 96%!

Question: if the Cluster > HA > Admission Control setting is set to “Disable: Allow VM power on operations that violate availability constraints”, does that override memory reservations?

Correct, although “override” is the wrong word. Memory reservations are then not taken into consideration when determining whether a VM can be powered on. So even if powering on that VM would cause a lot of memory pressure, it would still power on the VM.

Can I send you an email? I have a screenshot of this NOT happening. We have many VMs that have reserved memory. We tried to power on new vms and we got an error. What is your email?

The error that popped up was “No compatible host has sufficient resources to satisfy the reservation.”

Big bucket of deny on the power on virtual machine operation.

Version 6.0.0 3620759

Yes, email me at “thegabeman” with the boys from gmail :-)

Keep in mind that, apart from HA, a host won’t let you power on a VM if it can’t give the VM its reserved memory!!!

Example:

4 hosts with 100GB of physical RAM in total. HA has admission control ENABLED and you have set 1 host for failover. If your VMs have reserved memory, you will only be able to power on VMs for a total of 75GB of reserved memory. (Not 100% correct, since every VM also has some memory overhead that is always reserved.)

If you DISABLE admission control, you will be able to power on VMs for a total of 100GB of reserved memory (again, leaving out the overhead in this example). It is now not HA that is limiting you, but the hosts themselves, since a reservation means the host WILL provide physical memory to the VM, whatever happens. So if all VMs on a host have all their memory reserved and there is no physical RAM left, ESXi will not let you power on another VM.
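The example in this comment as a few lines of Python, reading “4 hosts with 100GB” as 100GB for the whole cluster (25GB per host), which is what makes the 75GB figure work, treating the 1-host failover reservation as a quarter of the cluster, and leaving out overhead just as the comment does. The function names are hypothetical; the second function sketches the host-level check that applies whether or not HA admission control is enabled.

```python
def ha_reservable_gb(total_gb, hosts, host_failures, admission_control=True):
    """Total memory reservations HA admission control allows (overhead ignored)."""
    if not admission_control:
        return total_gb  # HA no longer limits you; only the hosts do
    return total_gb * (hosts - host_failures) / hosts


def host_can_power_on(vm_reservation_gb, unreserved_gb_per_host):
    """Host-level check: the VM's reservation must fit on a single host."""
    return any(free >= vm_reservation_gb for free in unreserved_gb_per_host)


print(ha_reservable_gb(100, 4, 1))                           # admission control enabled
print(ha_reservable_gb(100, 4, 1, admission_control=False))  # admission control disabled
print(host_can_power_on(4, [0, 0, 0, 0]))                    # every host fully reserved
```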

If you don’t reserve any memory on the VMs, you could power on maybe 500GB of VMs, but you will probably have a lot of memory swapping to disk. Which is bad.

Does this answer your question?