My latest customer was still running VMware ESX 3.5 and Virtual Center 2.5 and asked me to upgrade their environment. Their whole environment was running on NetApp storage, and for ESX the Storage Array Type Plugin (SATP) and the Path Selection Policy (PSP) change when moving from ESX 3.5 to 4.1.

I started with this blog post by Nick Triantos: vSphere: Upgrading from non-ALUA to ALUA. I have added some extras I encountered during the upgrade and will show how the change can be done using the new NetApp Virtual Storage Console (VSC).

The very first step is, of course, the upgrade (actually I did a reinstall) of Virtual Center to vCenter 4.1, followed by a fresh install of the ESX hosts. I switched from ESX 3.5 booting from SAN to ESXi 4.1 booting from USB sticks. Once all your VMs are running on the freshly installed ESXi 4.1 hosts, your storage policy is your next concern.

Storage policy

According to the NetApp documentation and the VMware HCL, the preferred policy for ESXi 4.1 hosts connected to a NetApp FAS3020 is ALUA with a Round Robin path selection policy. Normally I would have made sure that the correct storage policy was set right after installation. However, in the existing storage design there is one big volume dedicated to VMware, and that volume holds a number of LUNs that are presented to the ESX hosts. This volume has a one-to-one mapping to the ESX iGroup. On NetApp, ALUA can only be activated at iGroup level, which means ALUA is activated for all the LUNs at once. Had I enabled ALUA right after the first host was running ESXi 4.1, all the ESX 3.5 hosts (pending upgrade) would also have received the ALUA policy. Since I could not find firm confirmation whether this would be an issue, and the consultant supporting their NetApp environment advised against enabling ALUA for the 3.5 hosts, I decided to switch to ALUA after all hosts were running ESXi 4.1.

Virtual Storage Console

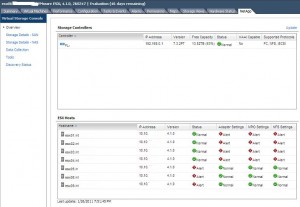

For earlier versions of ESX, NetApp built the ESX Host Utilities Kit. This was an agent that was installed in the service console. For ESXi 4.1 there is now the Virtual Storage Console 2.0, which only needs to be installed on the vCenter Server and not on the hosts themselves. After installation it is immediately available in all VI Clients by clicking the NetApp tab.

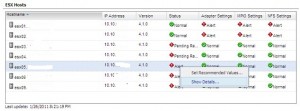

Through the Virtual Storage Console an admin can quickly see if the storage policies for the hosts have been set correctly. See image below:

In the above example you can see that, for the first host, the adapter settings, MPIO settings and NFS settings are not set correctly. Instead of switching to your host and setting the correct policy for each LUN on each host, you can now click on the host in the Virtual Storage Console and have the correct settings applied automatically.

Some GUI problems in the Virtual Storage Console

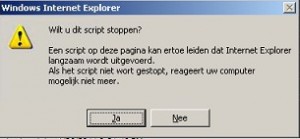

Although the Virtual Storage Console is a very handy tool, I did have some problems with it. Luckily these are only GUI problems and the storage was running fine all the time. The main problem I ran into is that the GUI is very slow. Clicking on any of the status alerts and asking for more details takes forever. In fact, it is so slow that I receive at least two warnings from Internet Explorer each time, asking me if I want to continue because the script is no longer responding:

After the page has finished loading, a full report is presented in which you have to search for the info you are looking for, which makes it unclear what the real issue is. The biggest problem here is that some values in the report are colored green and some are colored red to indicate a problem. Since a lot of people (mostly men, myself included) have some form of color blindness, it is difficult to see what is green and what is red.

Dear NetApp, please change this. It would be great to quickly get a short error report, with the extensive report like the one above available as an option. Scrolling through all these lines every time and waiting for the report to be generated isn’t what I’m looking for. Also, please mark warnings or alerts with an exclamation sign.

Let’s get to work

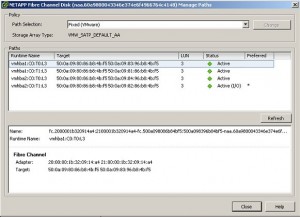

Now let’s see how the change was performed. I started with the whole cluster of ESXi hosts connected to 9 datastores, each datastore connected to one LUN, all part of just one volume. On all ESXi hosts the Storage Array Type Plugin (SATP) was automatically detected by ESXi and set to VMW_SATP_DEFAULT_AA with a “Fixed (VMware)” path selection policy (PSP).

All VMs were running and the change can be performed without downtime to the VMs, but because I wasn’t sure this process would be 100% error free, I decided to perform the change outside office hours.

To perform the change, I followed the blog post Nick Triantos wrote, with some minor changes:

1 Check Version

First I made sure I was running a supported ONTAP version, such as any version above 7.3.1. I logged on to the NetApp console using SSH and ran the following command:

version
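If you want to script this check, a minimal Python sketch could look like the one below. It assumes the `version` output contains the word "Release" followed by a dotted number; the sample string is only an illustration, not output from this customer's filer. The 7.3.1 threshold is taken from the text above and is compared strictly ("above"), so adjust it to whatever NetApp documents for your system.

```python
import re


def parse_ontap_version(version_output):
    """Extract the numeric release, e.g. (7, 3, 2), from `version` output."""
    match = re.search(r"Release\s+(\d+(?:\.\d+)+)", version_output)
    if match is None:
        raise ValueError("no release number found in: %r" % version_output)
    return tuple(int(part) for part in match.group(1).split("."))


def is_above(version, threshold=(7, 3, 1)):
    """Return True if version is strictly higher than threshold."""
    # Pad with zeros so that (8, 0) compares like (8, 0, 0).
    width = max(len(version), len(threshold))
    padded_version = version + (0,) * (width - len(version))
    padded_threshold = threshold + (0,) * (width - len(threshold))
    return padded_version > padded_threshold


# Assumed sample line, for illustration only.
sample = "NetApp Release 7.3.2: Thu Oct 15 04:12:15 PDT 2009"
print(is_above(parse_ontap_version(sample)))
```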

2 Enable ALUA

The next step was to enable ALUA on the ESX iGroups on each NetApp controller. First check the current setting of the iGroup, then enable ALUA and check the setting again. I ran the following commands:

igroup show -v <group>

igroup set <group> alua yes

igroup show -v <group>

After ALUA is enabled I received this output:

    <group> (FCP):
        OS Type: vmware
        Member: 21:00:00:1b:32:10:27:3d (logged in on: vtic, 0b)
        Member: 21:01:00:1b:32:30:27:3d (logged in on: vtic, 0a)
        ALUA: Yes
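With several iGroups on two controllers it can help to check this output programmatically. The following is a rough Python sketch that assumes the `igroup show -v` output has been captured as text with one field per line; the iGroup name `esx_hosts` is hypothetical, and the function name is my own, not part of any NetApp tooling.

```python
def parse_igroup(output):
    """Parse captured `igroup show -v` text into os_type, members and alua."""
    info = {"os_type": None, "members": [], "alua": None}
    for raw in output.splitlines():
        line = raw.strip()
        if line.startswith("OS Type:"):
            info["os_type"] = line.split(":", 1)[1].strip()
        elif line.startswith("Member:"):
            # The WWPN is the first token after "Member:".
            info["members"].append(line.split()[1])
        elif line.startswith("ALUA:"):
            info["alua"] = line.split(":", 1)[1].strip().lower() == "yes"
    return info


# Sample modeled on the output above; "esx_hosts" is a made-up iGroup name.
sample = """\
esx_hosts (FCP):
    OS Type: vmware
    Member: 21:00:00:1b:32:10:27:3d (logged in on: vtic, 0b)
    Member: 21:01:00:1b:32:30:27:3d (logged in on: vtic, 0a)
    ALUA: Yes
"""

info = parse_igroup(sample)
print(info["alua"], len(info["members"]))
```

An `alua` value of `None` means the field was not found at all, which is worth treating differently from an explicit "No".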

3 Reboot

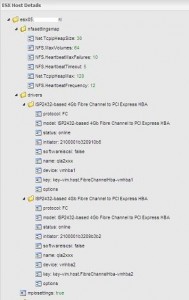

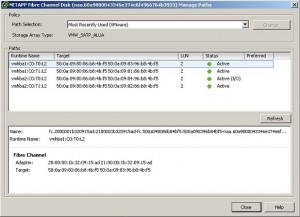

This is where I stopped following the guide from Nick. The NetApp filer now reports that ALUA is enabled to the ESXi hosts, but this will only be detected by ESXi after a reboot. To reboot the ESXi host, I set it to maintenance mode, rebooted it and checked if the host had now switched to VMW_SATP_ALUA.

4 Set the Path Selection Policy (PSP)

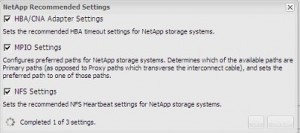

You might have noticed that although the host has now switched the SATP to VMW_SATP_ALUA, the Path Selection Policy is still at “Most Recently Used (VMware)”, which isn’t what I was aiming for. Round Robin is what I want. To make this change, I switched to the NetApp tab in the VI Client (Virtual Storage Console) and clicked on the host I was working on. Then I selected “Set recommended values” and, in the next screen, chose the settings to be applied:

I selected all three options and waited for the change to complete. In the NetApp logging you can see the settings being applied:

After these settings had been applied, I rebooted the host again.

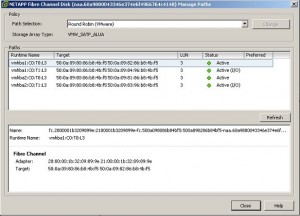

5 Check the path selection policy

After the reboot, I checked whether the Path Selection Policy was now set correctly. I also checked the Virtual Storage Console for warnings. Updating this view can sometimes take some minutes, even after you click “update” in the upper right corner.
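With 9 LUNs per host, checking every device by eye gets tedious. As a sketch only: if you capture a per-device listing from the host (for example what `esxcli nmp device list` prints on ESX/ESXi 4.x), a small Python script can flag the devices that are not yet on Round Robin. The sample layout and the device IDs below are assumptions for illustration, so verify the field names against your own output.

```python
def devices_not_using(output, wanted_psp="VMW_PSP_RR"):
    """Return {device: (satp, psp)} for devices whose PSP is not wanted_psp."""
    devices = {}
    current = None
    for raw in output.splitlines():
        if not raw.strip():
            continue
        if not raw[0].isspace():
            # An unindented line starts a new device section.
            current = raw.strip()
            devices[current] = {"satp": None, "psp": None}
        elif current is not None:
            key, _, value = raw.strip().partition(":")
            if key == "Storage Array Type":
                devices[current]["satp"] = value.strip()
            elif key == "Path Selection Policy":
                devices[current]["psp"] = value.strip()
    return {
        dev: (d["satp"], d["psp"])
        for dev, d in devices.items()
        if d["psp"] != wanted_psp
    }


# Assumed sample listing; the naa IDs are made up.
sample = """\
naa.0001
    Device Display Name: NETAPP Fibre Channel Disk (naa.0001)
    Storage Array Type: VMW_SATP_ALUA
    Path Selection Policy: VMW_PSP_RR
naa.0002
    Device Display Name: NETAPP Fibre Channel Disk (naa.0002)
    Storage Array Type: VMW_SATP_ALUA
    Path Selection Policy: VMW_PSP_MRU
"""

print(devices_not_using(sample))
```

An empty result means every listed device reports the wanted policy.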

After the settings for the ESXi host were correct, I proceeded to the next host. In some situations a warning remained about “Reboot pending” when checking the Virtual Storage Console, but I just went ahead. Eventually all warnings cleared automatically without further intervention.

“On NetApp ALUA can only be activated at volume level, which means ALUA is activated for all the LUNs at once.” Per igroup?

You’re right. Changed it. Thanks.

Now you should upgrade to OnTap v8.01 to benefit from VAAI as well ;)

FAS3020 can’t run 8.0.1 :(

Well, it can’t run 8.0.1 and be supported by NetApp. You can actually use the code for the 2040. Just make sure the CF card in the 3020 is bigger than the 256MB one shipped with the system initially. If you do get a new one make sure it is formatted in FAT16 as FAT32 will throw an error.

Excellent walk through!

I am a little confused. If I use the 2040 ONTAP 8.0.1 code on a 3020, will it work? I read that 8.0.1 is only compatible with 64-bit hardware.

Great article, very informative.

Does this also apply to ESXi 4.0.0, 332073? We “seem” to be having performance issues and I wonder if this is one item that could be related? I have a similar screen within VSC

Well, you should check the VMware HCL to see which NetApp and ESXi combination matches which PSP. Go to:

http://www.vmware.com/resources/compatibility/search.php and click “What you are looking for” and change it to Storage/SAN

I’m actually curious about this also. Has anyone actually done this successfully?

FAS2020 is 32-bit. It will not run ONTAP 8.x, it has Celeron inside.

FAS2040 is capable of running ONTAP 8.x, it has a Xeon.