You find yourself sitting at your desk on a Saturday morning, waiting for the cloning of a VM to finish. It seems to take forever, and I just wish I could run VSAN in my home lab to get some more speed out of my kit. I have tried to run VSAN on my home-made ESXi hosts, but my RAID controller is not compatible (yet) with VSAN, so I had to remove it again and now I’m waiting for the official release.

While waiting, I browsed through some VMworld posts in my Twitter feed and there was a link to William Lam’s post about his Barcelona session: “VMworld Barcelona #NotSupported Tips/Tricks for vSphere 5.5 Slides Posted”. Browsing through his slides, I got a really stupid idea. What if I ran virtual ESXi with VSAN included in my home lab? Would it be faster than my current NFS NAS that keeps me waiting forever? The virtual ESXi hosts would only be used for offering the VSAN datastore to the physical ESXi hosts in the cluster, so there is no need to run VMs in the memory of these virtual ESXi hosts. Hmmm, sounds like a plan to test this config, let’s do it.

Disclaimer: Don’t use the data I got from my tests as a reference for anything. My kit is not good enough to produce serious numbers that you can relate to business environments. This is testing just for fun, and for me personally to see if I can get some more speed out of my current environment, even if it means setting up this idiotic configuration.

To start with, this is what I have in my home lab:

– 2x ESXi host, each with 32GB of RAM and a quad-core Intel i5-3470 CPU

– Iomega IX4 200D NAS configured with NFS offering 2.7TB of storage.

– Disks on each host:

- SATA 640GB 16MB Cache, 5400 RPM, WD6400AACS-00G8B1, CAVIAR GREENPOWER 640GB

- SATA 400GB 16MB Cache, 7200 RPM, Samsung SpinPoint T166 HD403LJ

- SATA 256GB SAMSUNG SSD 830 Series

On one of the physical ESXi hosts I have a Windows 2012 Server which gets its storage from the IX4. This is my test VM on which I run IOmeter on the F-drive with a 4GB test file.

Windows 2012 Server (Test VM):

– 1 vCPU

– 1500MB RAM

– C-drive and D-drive of 40GB

– F-drive of 10GB on separate paravirtual SCSI controller

Testing performed:

– Using IOmeter

– Each test is 300 seconds

– Size of the test file is 4GB

– Test specifications:

- Max throughput 100% read

- RealLife 60% random and 65% read

- Max throughput 50% read

- Random 8k 70% read

Test sequences

I did a number of tests, which I describe below:

- Test 01: The test VM that is running IOmeter runs entirely on the IX4-200D NFS volume.

- Test 02: The test VM that is running IOmeter runs entirely on the physical SSD of the physical ESXi host.

- Test 03: The VSAN ESXi host has the virtual SSD disk on the local SSD disk of the physical ESXi host and has the virtual SATA disk also on the local SSD disk of the physical ESXi host.

- Test 04: The VSAN ESXi host has the virtual SSD disk on the local SSD disk of the physical ESXi host and has the virtual SATA disk on the IX4-200D NFS volume.

You see that in tests 3 and 4 I’m moving the data disk that provides the actual capacity to the VSAN. This is the disk that VSAN should relieve from heavy reads and writes by using the SSD as read and write cache.

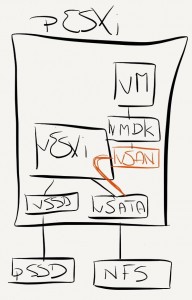

In the image above you see the situation of Test 04: the pESXi (physical ESXi) host has a pSSD (physical SSD) and an NFS datastore to offer to the VMs running on it. Inside that pESXi host I’m running a virtual ESXi (vESXi) host. That vESXi host is given a virtual SSD (vSSD), which lives on the pSSD, and a virtual SATA disk (vSATA), which lives on the NFS datastore. VSAN in the vESXi host uses the vSSD and vSATA disks and offers them as the VSAN datastore to all the hosts in the cluster of which the vESXi host is a member. And finally, in the memory of the pESXi host, there is my test VM with its VMDK on the VSAN datastore. Inception to the max...

The results

For my little home lab the most important thing is the comparison between the first test (all on the IX4) and the fourth test, where I have the VSAN sitting between the IX4 and the VM. Looking at the results of the “RealLife 60% random and 65% read” test, I can see an improvement from 71 to 3865 IOPS and a throughput gain from 1 MBps to 30 MBps. That is a big improvement for a cheap home lab like this.
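To put that gain into a single number, here is a minimal Python sketch that divides the Test 04 figures by the Test 01 figures. The variable and function names are just my own illustration, and because IOmeter's MBps values are shown rounded to whole numbers here, the throughput ratio is only a rough indication.

```python
# IOPS and MBps for the RealLife test, copied from the results tables.
baseline = {"iops": 71, "mbps": 1}      # Test 01: VM on the IX4 NFS volume
via_vsan = {"iops": 3865, "mbps": 30}   # Test 04: VSAN in front of the IX4


def speedup(before, after):
    """Return how many times larger `after` is than `before`."""
    return after / before


iops_gain = speedup(baseline["iops"], via_vsan["iops"])
mbps_gain = speedup(baseline["mbps"], via_vsan["mbps"])

print(f"IOPS: {iops_gain:.1f}x, throughput: {mbps_gain:.1f}x")
# prints "IOPS: 54.4x, throughput: 30.0x"
```

So on this workload the nested VSAN delivers roughly 54 times the IOPS of the bare IX4.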

Even though the VSAN will cost me some RAM overhead on the physical ESXi host, since it has to run a virtual ESXi host of 4GB, this configuration will bring me 2.7TB of pretty fast storage if I make the VSAN SATA disk as big as the storage available on the IX4. Of course there is the added risk of losing all VMs I put on the VSAN if that one VSAN virtual SATA disk fails, but hey, we’ve got to live on the edge a little, don’t we?

Another option I have is to run the VSAN on the SATA disks of the physical host, which are the results shown in test number 3. That will give me a little better performance than when the VSAN SATA disk is on the IX4, but the difference is very small. The small difference can probably be explained by everything being written to the cache on the virtual SSD. In this configuration I don’t have the full 2.7TB available.
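How small is "very small"? As a rough check, this Python sketch computes how far the Test 04 IOPS fall below the Test 03 IOPS for each of the four IOmeter tests. The numbers are copied from the results tables; the dictionary layout and the `percent_drop` helper are only my own illustration.

```python
# IOPS per IOmeter test, copied from the results tables: (Test 03, Test 04).
iops = {
    "Max Throughput-100%Read": (8710, 6649),
    "RealLife-60%Rand-65%Read": (4196, 3865),
    "Max Throughput-50%Read": (2382, 2363),
    "Random-8k-70%Read": (4146, 3777),
}


def percent_drop(test03, test04):
    """Percentage by which Test 04 is lower than Test 03."""
    return (test03 - test04) / test03 * 100


drops = {name: percent_drop(*pair) for name, pair in iops.items()}

for name, drop in drops.items():
    print(f"{name}: Test 04 is {drop:.1f}% below Test 03")
```

In these numbers the gap stays under 10% for the mixed and random workloads, while the pure sequential read test loses close to a quarter of its IOPS.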

The fastest solution is of course to run everything on my physical SSDs on the physical host, but that will give me only 2x 256GB of capacity.

For me, putting the VSAN in front of the IX4, even by using virtual ESXi hosts that don’t have to run a VM load themselves, will greatly improve the performance of my IX4 and it will give me the opportunity to get more experience with the VSAN product even though I don’t have the proper RAID controller that is supported with VSAN.

The numbers

Remember: Don’t use them for any performance reference, I just use them to see the difference in performance in my setup.

Max throughput test with 100% Read

| Max Throughput-100%Read | IOPS | MBps | Average Response Time (ms) |
| --- | --- | --- | --- |
| Test 01 | 3089 | 97 | 19 |
| Test 02 | 15243 | 476 | 4 |
| Test 03 | 8710 | 272 | 6 |
| Test 04 | 6649 | 208 | 9 |

Real Life 60% Random and 65% Read

| RealLife-60%Rand-65%Read | IOPS | MBps | Average Response Time (ms) |
| --- | --- | --- | --- |
| Test 01 | 71 | 1 | 842 |
| Test 02 | 10233 | 80 | 6 |
| Test 03 | 4196 | 33 | 13 |
| Test 04 | 3865 | 30 | 15 |

Max Throughput with 50% Read

| Max Throughput-50%Read | IOPS | MBps | Average Response Time (ms) |
| --- | --- | --- | --- |
| Test 01 | 1865 | 58 | 32 |
| Test 02 | 11550 | 361 | 5 |
| Test 03 | 2382 | 74 | 25 |
| Test 04 | 2363 | 74 | 25 |

Random test with 8K – 70% Read

| Random-8k-70%Read | IOPS | MBps | Average Response Time (ms) |
| --- | --- | --- | --- |
| Test 01 | 47 | 1 | 1248 |
| Test 02 | 30023 | 235 | 2 |
| Test 03 | 4146 | 32 | 14 |
| Test 04 | 3777 | 30 | 15 |
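A quick sanity check on these tables: throughput should be roughly IOPS times the I/O size, so dividing MBps by IOPS gives the block size each test implies. The Python sketch below does that for the Test 02 rows. I'm assuming that 1 MB equals 1024 KB in IOmeter's output; the helper name is my own, and the block sizes are inferred from the numbers, not taken from the test configuration.

```python
# (IOPS, MBps) for Test 02, copied from the four results tables.
test02 = {
    "Max Throughput-100%Read": (15243, 476),
    "RealLife-60%Rand-65%Read": (10233, 80),
    "Max Throughput-50%Read": (11550, 361),
    "Random-8k-70%Read": (30023, 235),
}

KB_PER_MB = 1024  # assumption: the MBps column uses binary megabytes


def implied_block_kb(iops, mbps):
    """I/O size in KB implied by throughput = IOPS * block size."""
    return mbps * KB_PER_MB / iops


blocks = {name: implied_block_kb(i, m) for name, (i, m) in test02.items()}

for name, kb in blocks.items():
    print(f"{name}: ~{kb:.0f} KB per I/O")
```

The two max throughput tests come out at about 32 KB per I/O and the other two at about 8 KB, which matches the "8k" in the name of the random test and explains why the MBps columns differ so much between tests with similar IOPS.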

Can’t wait for the official release of VMware’s VSAN….

Interesting, what about vFlash instead of VSAN? I’m waiting on my SSD for my home lab and was planning on adding vflash on my config. Your VSAN config will definitely help in getting my feet wet with some VSAN action. Thanks

For me the pain is in the write performance of my NAS, therefore I used VSAN because the VSAN has read and write caching. vSphere Flash Read Cache is, like the name says, only Read Cache.
