A customer with a small vSphere environment of just two hosts had performance issues and asked me to investigate. At first glance at the technical specs, you would expect this configuration to work. With two hosts, each with two quad-core CPUs, the environment had a total of 16 CPU cores. Only 9 VMs were running, using a total of 23 vCPUs. Usually you can easily run 5 vCPUs per core, if not more.
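The arithmetic behind that first impression, as a small Python sketch: the cluster-wide ratio is far below the 5:1 rule of thumb, which is why the setup looked fine on paper. Keep in mind that a cluster-wide average says nothing about how the vCPUs of individual VMs are spread over each host.

```python
# Inventory as described above: two hosts, each with two quad-core CPUs,
# running 9 VMs with 23 vCPUs in total.
HOSTS = 2
SOCKETS_PER_HOST = 2
CORES_PER_SOCKET = 4
TOTAL_VCPUS = 23


def vcpu_to_core_ratio(total_vcpus: int, hosts: int, sockets: int, cores: int) -> float:
    """Return the cluster-wide vCPU:physical-core consolidation ratio."""
    physical_cores = hosts * sockets * cores
    return total_vcpus / physical_cores


ratio = vcpu_to_core_ratio(TOTAL_VCPUS, HOSTS, SOCKETS_PER_HOST, CORES_PER_SOCKET)
physical = HOSTS * SOCKETS_PER_HOST * CORES_PER_SOCKET
print(f"{TOTAL_VCPUS} vCPUs on {physical} cores = {ratio:.2f} vCPUs per core")
# prints "23 vCPUs on 16 cores = 1.44 vCPUs per core"
```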

It turned out that a number of VMs had 4 or 2 vCPUs they really didn’t need. You can easily discover this by checking the READY and CO-STOP values in the vCenter performance charts. Go to the Performance tab of your vSphere host and select Advanced. Then click “Chart Options”, go to the CPU section and choose “Real-time”. On the right, deselect all counters and select only “Ready” and “Co-Stop”. Click OK.

READY: The time a virtual machine must wait in a ready-to-run state before it can be scheduled on a CPU.

CO-STOP: The amount of time an SMP virtual machine was ready to run, but incurred a delay due to co-vCPU scheduling contention.
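In the real-time charts, Ready and Co-Stop are shown as summation values in milliseconds per sample, which are hard to judge on their own. A commonly used conversion turns them into a percentage of the sample interval. The sketch below assumes the 20-second real-time sample interval; for a VM-level value, dividing by the VM's vCPU count gives an average per-vCPU figure. The sample numbers are hypothetical.

```python
# Assumption: vCenter real-time charts collect one sample every 20 seconds.
REALTIME_INTERVAL_S = 20


def summation_to_percent(value_ms: float, interval_s: int = REALTIME_INTERVAL_S,
                         vcpus: int = 1) -> float:
    """Convert a Ready or Co-Stop summation value (ms per sample) to a percentage.

    Passing vcpus > 1 spreads a VM-level value over its vCPUs, giving an
    average per-vCPU percentage.
    """
    if interval_s <= 0 or vcpus <= 0:
        raise ValueError("interval_s and vcpus must be positive")
    return value_ms * 100 / (interval_s * 1000 * vcpus)


# Hypothetical sample: a 4-vCPU VM reporting 4,000 ms of Ready in one sample.
print(summation_to_percent(4000))           # 20.0 (VM-level)
print(summation_to_percent(4000, vcpus=4))  # 5.0 (average per vCPU)
```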

I made a list of all VMs and their vCPUs and checked each VM’s real CPU usage over the past weeks. None of the VMs had excessive CPU usage, and I concluded that reducing their vCPUs wouldn’t be a problem. Unfortunately, this had to be done outside office hours. This was the vCPU assignment when I started investigating (remember that each ESXi host has only 8 cores):

| Current situation | vCPUs |
| --- | --- |
| Mmgt | 1 |
| Citrix 1 | 4 |
| vCenter | 2 |
| Application | 4 |
| Develop | 1 |
| Exchange | 2 |
| DomainController | 1 |
| SQL | 4 |
| Citrix 2 | 4 |
| Total (per host) | esx01: 12, esx02: 11 |

So I had two big Citrix VMs with 4 vCPUs each that were in use, but not heavily, plus an application server and a SQL server with 4 vCPUs each. Over the past weeks, the application server and SQL server had peaked at a maximum of 1 GHz. That is ONE GHz for a 4-vCPU VM. A bit overdone, don’t you think?
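A rough way to put that observation into numbers is sketched below. The post does not state the hosts’ clock speed, so `CORE_MHZ = 2500` is a hypothetical value, and `target_util` (the headroom you want to keep at the observed peak) is an arbitrary example, not a VMware recommendation.

```python
import math


def peak_utilization(peak_mhz: float, vcpus: int, core_mhz: float) -> float:
    """Fraction of the VM's total vCPU capacity used at its observed peak."""
    return peak_mhz / (vcpus * core_mhz)


def suggested_vcpus(peak_mhz: float, core_mhz: float, target_util: float = 0.5) -> int:
    """Smallest vCPU count that keeps the observed peak at or below target_util.

    Always returns at least 1.
    """
    return max(1, math.ceil(peak_mhz / (core_mhz * target_util)))


CORE_MHZ = 2500  # hypothetical clock speed; the post does not state it
print(f"{peak_utilization(1000, 4, CORE_MHZ):.0%}")  # 10%
print(suggested_vcpus(1000, CORE_MHZ))               # 1
```

The formula only looks at the observed peak. Going to 2 vCPUs, as was done later that evening, leaves more room for load the past weeks did not show.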

Since I had to wait until the evening before I could downsize, I decided to go for a small quick win and shuffle the VMs around to create the following situation:

| After VMotion | esx01 (vCPUs) | esx02 (vCPUs) |
| --- | --- | --- |
| Mmgt | | 1 |
| Citrix 1 | 4 | |
| vCenter | | 2 |
| Application | | 4 |
| Develop | | 1 |
| Exchange | | 2 |
| DomainController | | 1 |
| SQL | | 4 |
| Citrix 2 | 4 | |
| Total | 8 | 15 |

I put the two Citrix VMs together on one host, which would stop the ‘fight’ for free CPU cores. The second host now had more vCPUs to handle, but since its two big VMs didn’t need much CPU time, I figured the host would have enough capacity to at least make it to the evening without issues.

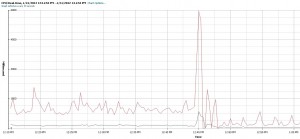

The image above shows the READY and CO-STOP values before and after the VMotion at 12:45 PM. You can clearly see a big drop in both READY and CO-STOP.

Later that evening I changed the number of vCPUs for the vCenter Appliance VM from 2 to 1, for the Application VM from 4 to 2 and for the SQL VM from 4 to 2.

| After vCPU change | esx01 (vCPUs) | esx02 (vCPUs) |
| --- | --- | --- |
| Mmgt | | 1 |
| Citrix 1 | 4 | |
| vCenter | | 1 |
| Application | | 2 |
| Develop | | 1 |
| Exchange | | 2 |
| DomainController | | 1 |
| SQL | | 2 |
| Citrix 2 | 4 | |
| Total | 8 | 10 |
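The per-host totals in the two tables after the VMotion can be checked with a few lines of Python. The sketch also shows the vCPU:core ratio per 8-core host: esx01 ends up with exactly one vCPU per core, which is the “no CPU overcommit” placement for the Citrix VMs.

```python
CORES_PER_HOST = 8

# Placements taken from the "After VMotion" and "After vCPU change" tables.
after_vmotion = {
    "esx01": {"Citrix 1": 4, "Citrix 2": 4},
    "esx02": {"Mmgt": 1, "vCenter": 2, "Application": 4, "Develop": 1,
              "Exchange": 2, "DomainController": 1, "SQL": 4},
}
after_resize = {
    "esx01": {"Citrix 1": 4, "Citrix 2": 4},
    "esx02": {"Mmgt": 1, "vCenter": 1, "Application": 2, "Develop": 1,
              "Exchange": 2, "DomainController": 1, "SQL": 2},
}


def host_summary(layout: dict[str, dict[str, int]],
                 cores: int = CORES_PER_HOST) -> dict[str, tuple[int, float]]:
    """Return (total vCPUs, vCPUs per physical core) for each host."""
    return {host: (sum(vms.values()), sum(vms.values()) / cores)
            for host, vms in layout.items()}


for name, layout in [("After VMotion", after_vmotion), ("After vCPU change", after_resize)]:
    for host, (total, ratio) in host_summary(layout).items():
        print(f"{name}: {host} has {total} vCPUs, {ratio:.3f} per core")
```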

As the following image shows, you can again see a drop in the READY and CO-STOP values. At 9:20 PM both the SQL and Application servers were shut down and started again with 2 vCPUs. At 9:55 PM vCenter was robbed of its 2nd vCPU.

Conclusion: Think twice before you give VMs extra vCPUs they don’t really need. You can negatively impact the performance of your whole environment, since the VMkernel has to find a time slot in which it can give all of a VM’s vCPUs access to physical cores.
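To get a feel for why wide VMs suffer, here is a deliberately simplified toy model of *strict* co-scheduling, written in Python. It is not how the ESXi scheduler works (ESXi uses relaxed co-scheduling, which tolerates some skew between a VM’s vCPUs), and the independence assumption and idle probability `q_idle` are made up for illustration: each of a host’s cores is idle independently with probability `q_idle`, and a VM can run only when at least as many cores as it has vCPUs are idle at the same moment.

```python
from math import comb


def p_enough_idle_cores(vcpus: int, cores: int, q_idle: float) -> float:
    """Probability that at least `vcpus` of `cores` are idle simultaneously.

    Toy model: each core is idle independently with probability q_idle,
    so the number of idle cores follows a binomial distribution.
    """
    if not 0 < vcpus <= cores:
        raise ValueError("vcpus must be between 1 and cores")
    return sum(comb(cores, k) * q_idle**k * (1 - q_idle)**(cores - k)
               for k in range(vcpus, cores + 1))


# An 8-core host where each core is idle 30% of the time (hypothetical).
for n in (1, 2, 4):
    print(n, "vCPUs:", round(p_enough_idle_cores(n, cores=8, q_idle=0.3), 3))
```

Even in this crude model, the chance of finding enough simultaneously idle cores drops quickly as the vCPU count grows, which matches the intuition in the conclusion above.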

And now you have an unsupported vCenter config?

Great article

Is there a typo in the 3rd table? Shouldn’t the Citrix VMs have 2 vCPUs each, leaving a total of 4 on ESX01?

Actually, the install guide doesn’t mention a required number of vCPUs for the vCenter virtual appliance, see: http://pubs.vmware.com/vsphere-50/index.jsp?topic=%2Fcom.vmware.vsphere.install.doc_50%2FGUID-7C9A1E23-7FCD-4295-9CB1-C932F2423C63.html But I do get your point. This customer has no license for DRS, only uses HA and occasionally performs a VMotion. Should I really run into strange issues, I’d be happy to add a 2nd vCPU to the vCenter appliance and prove that the missing vCPU isn’t causing the problem. So, according to the docs, I am running a supported config, although I agree it is debatable whether one vCPU on the appliance is supported. The risk of this causing issues in such a small environment is negligible.

No, I left the Citrix VMs at 4 vCPUs since they really seemed to be using them. Putting them on a host without CPU overcommit gives the best performance.

The vCenter doc says under system requirements “2 64-bit CPUs”. Not sure how much clearer it needs to be. It makes no distinction between the installable version and the appliance.

See: http://pubs.vmware.com/vsphere-50/topic/com.vmware.vsphere.install.doc_50/GUID-67C4D2A0-10F7-4158-A249-D1B7D7B3BC99.html

It reads “vCenter Server hardware requirements” – “Two 64bit CPUs”

and a little further down: “Hardware Requirements for VMware vCenter Server Appliance” – nothing about CPU requirements, only about memory and disk.

I know it is just a word game :-)

Great blog post. I’m really glad you included the performance screen captures and the VM breakdown.

Were these machines Nehalem or Westmere? If so, did you consider enabling Hyperthreading?

Hyperthreading was not available. And even though HyperThreading could have given more breathing room here, it wouldn’t really solve the issue of having too many vCPUs.

Well, it would have given you 16 execution units for the scheduler to work with, and the VMware scheduler is HT aware, so any idle cores would be scheduled on the HT units, meaning you wouldn’t take a performance hit. I’ve seen it increase performance significantly in exactly the scenario you outlined, which is why I brought it up. Many admins disable HT based on NetBurst-era recommendations, but with Nehalem and beyond the recommendation has changed to always enable it, unless you have a 99.9% application where you can benchmark an actual performance degradation from having it enabled.

Yes, since the new HT you will always get a performance gain, although it can vary between 1% and 15%, sometimes even 30%. Nevertheless, I think that even with lots of processing power you should not assign too many vCPUs to your VMs. That is the point I wanted to make in this blog post: don’t just throw more vCPUs at it, think before you add vCPUs.

That’s made me think twice about building OTT-resourced VMs! Can you tell me how I can get the Co-Stop counter? It’s not showing.

In vCenter 5 it is available as one of the CPU performance counters. You can also find it when logged on to an ESXi host using esxtop (%CSTP).

You can see it via esxtop as %CSTP but yes, it doesn’t seem to be in the vSphere Client (I have v5.0.0 with v4.1 hosts).

On vCenter 5 with an ESXi 5 host: in the VI Client click on a host, then Performance tab, Advanced, Chart Options, CPU, Real-time, Counters, and there is Co-Stop. Maybe you don’t have the Co-Stop counter because your host is 4.1.
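If the counter is missing from the client, esxtop batch mode (`esxtop -b`) writes a perfmon-style CSV that you can summarize yourself. The Python sketch below averages `% Ready` and `% CoStop` per VM. It assumes the column headers follow the pattern `\\host\Group Cpu(id:name)\% Ready`; check that against your own output, since headers may differ between versions. Note that at the VM (group) level these percentages are summed over the VM’s vCPUs, so they can exceed 100%. The sample data is made up.

```python
import csv
import io
import re

# Assumed header pattern, e.g. "\\esx01\Group Cpu(1234:SQL)\% Ready".
COLUMN_RE = re.compile(r"\\Group Cpu\(\d+:(?P<vm>[^)]+)\)\\% (?P<metric>Ready|CoStop)$")


def ready_costop_averages(csv_text: str) -> dict[str, dict[str, float]]:
    """Average % Ready and % CoStop per VM across all samples in the CSV."""
    reader = csv.reader(io.StringIO(csv_text))
    header = next(reader)
    # Map column index -> (VM name, metric) for the columns we care about.
    wanted: dict[int, tuple[str, str]] = {}
    for idx, name in enumerate(header):
        match = COLUMN_RE.search(name)
        if match:
            wanted[idx] = (match.group("vm"), match.group("metric"))
    totals: dict[str, dict[str, float]] = {}
    samples = 0
    for row in reader:
        if not row:  # skip blank lines
            continue
        samples += 1
        for idx, (vm, metric) in wanted.items():
            per_vm = totals.setdefault(vm, {})
            per_vm[metric] = per_vm.get(metric, 0.0) + float(row[idx])
    if samples == 0:
        return {}
    return {vm: {metric: total / samples for metric, total in metrics.items()}
            for vm, metrics in totals.items()}


# Hypothetical two-sample export for one VM (timestamps are placeholders).
sample = (
    '"(PDH-CSV 4.0)","\\\\esx01\\Group Cpu(1234:SQL)\\% Ready","\\\\esx01\\Group Cpu(1234:SQL)\\% CoStop"\n'
    '"t1","4.0","1.0"\n'
    '"t2","6.0","3.0"\n'
)
print(ready_costop_averages(sample))  # {'SQL': {'Ready': 5.0, 'CoStop': 2.0}}
```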

Great article! I’ve always been conservative with vCPUs due to horror stories from the days before “relaxed co-scheduling” was introduced, but I’ve never really seen much data to support it. Thanks for the detailed analysis and screenshots!

Good post Gabe. Many people overlook checking CPU Ready when they see low CPU utilization. It’s an easy mistake to make, and something I’ve seen many a time.

Keep the vCPUs lean and mean! :)

Cheers,

Simon

So if I understand this correctly, the Ready value should be lower than the Co-Stop value? I have VMs with 6 vCPUs and their Ready value is three times higher than Co-Stop. Admittedly, the servers are not doing much yet, but I expect them to be used heavily soon.

Quick question: does DRS take these performance metrics into consideration? ;)

Great article Gabe [I’ll refer to you as Gabe now].

Quick question: if I notice high vCPU utilization in Perfmon [~90%] under stress, but low CPU utilization at the host level [physical CPU ~30-40% utilized] AND very good READY and CO-STOP times, is it a cause for concern?

It could be that a process inside the VM is polling for input. Old 16-bit DOS programs are known for this, but it can also be a Windows application. As long as host CPU isn’t affected and performance seems good to the end user, there is not much to worry about. But I would investigate which program is causing the high utilization.

Veeam produces really great apps (monitoring/backup), just be sure to stick with the last stable version as opposed to the newest release :)

Sooooo... with which version of ESXi was this analysis done? I don’t see it in the article.

Relaxed co-scheduling is supposed to take care of this; I wonder whether the same issue would occur if the test were repeated on VMware ESXi 5.1 with the latest VMware Tools on each guest, etc.

If I decrease the number of CPUs in a virtual machine, will it affect my system?

I am now using 4 CPUs: the number of virtual sockets is 2 and the number of cores per socket is 2, for a total of 4.

Now I want to use a total of 2 CPUs. Please help me at the earliest.

Thanks

veera .

Thank you for reading my blog and adding a comment.

When doing calculations I always work with cores; the number of physical CPUs is not very important (but still a little). For a VM it doesn’t matter whether you choose virtual sockets or virtual cores. These only matter for licensing with SQL or Oracle.

When you reduce the number of vCPUs of a VM, you should always keep an eye on that VM’s performance. What my post is about is that you shouldn’t just throw a lot of vCPUs at a VM, because even if those vCPUs are not very busy, they still occupy CPU time and hinder other VMs.

Your comment isn’t fully clear about what you want to achieve. Can you send more details?

Thanks Gabrie.

I have one more issue with VMs.

1) My datastore space was 101 GB.

2) I created a VM and assigned 60 GB of space from the datastore.

3) After deleting that VM, I am not getting the datastore space back.

FYI:

I don’t see any VM-related files when browsing the datastore,

and I refreshed many times but nothing changed.

Thanks.

veera.

vCenter Server runs on Windows Server and demands more.

VMware vCenter Server Appliance runs on a custom Linux and is lighter.

They are two different things, although they (mostly) provide the same service.

Veeru, that’s because your array hasn’t reclaimed the dead space created when you deleted the VM. If your array had VAAI integration, it would have happened automatically. Check with your storage vendor; it may just be a case of a firmware update (if not a licensing issue).

I have a VM setup with 2 sockets and 2 cores per socket for a total of 4 CPUs. If I wanted to reduce this to 2 CPUs would it be better to have 1 socket with 2 cores or 2 sockets with 1 core each? Or is there really a difference?

Hi, sorry for the late reply, it’s been a busy week.

No, it does not matter for performance whether you use 1 socket with 2 cores or 2 sockets with 1 core; in total you still have 2 vCPUs. See this excellent post by Frank Denneman: http://frankdenneman.nl/2013/09/18/vcpu-configuration-performance-impact-between-virtual-sockets-and-virtual-cores/

Also check your Microsoft licensing. In our datacenter we switched from 4 vCPUs as 4 sockets with 1 core each to 4 vCPUs as 1 socket with 4 cores, and it saved us quite a bit in licensing costs :-)

Regards

Gabrie

Perfect!

Thank you!

This is very useful. It almost certainly explains the problems we had been having, where web servers that were using very little CPU would respond to a small increase in load with huge slowdowns and massive load averages, yet 99% idle time. So my question is how to deploy applications such as web servers that may see high demand peaks (think Reddit). If we can’t give the machines spare capacity in advance because of this behavior, that only leaves horizontal scaling. Or is there some way to assign extra vCPUs to a VM so that they behave the way you would expect if they were physical?

What you could do is create CPU affinities, but that is considered bad practice since you can’t VMotion those VMs anymore. Another option is to set CPU reservations, but those work in MHz, not at the vCPU/core level.
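Because a reservation is expressed in MHz, reserving “the equivalent of N cores” is a simple multiplication by the core clock speed. A minimal Python sketch follows. The clock speed and VM size are hypothetical, and the check that refuses to reserve more than the VM’s vCPUs could consume is this sketch’s own rule. A reservation guarantees capacity in MHz; it does not pin vCPUs to specific cores the way affinity does.

```python
def reservation_mhz(cores_worth: float, vcpus: int, core_mhz: float) -> int:
    """MHz reservation roughly equal to `cores_worth` fully busy physical cores.

    A VM cannot consume more than vcpus * core_mhz, so this sketch rejects
    requests for more cores than the VM has vCPUs.
    """
    if cores_worth <= 0:
        raise ValueError("cores_worth must be positive")
    if cores_worth > vcpus:
        raise ValueError("cannot reserve more cores than the VM has vCPUs")
    return round(cores_worth * core_mhz)


# Hypothetical: a 4-vCPU web server on 2,500 MHz cores, reserving 2 cores' worth.
print(reservation_mhz(2, vcpus=4, core_mhz=2500))  # 5000
```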

Thank you for the article!

I would like to ask a different question though about the host scheduler.

Roughly speaking, the host scheduler uses the MHz-per-share (MPS) metric to schedule VMs. This means that, based on the VMs’ shares, the scheduler selects the VM with the lowest MPS, i.e. the VM with the lowest utilization of its entitled resources.

However, since a VM can initially be scheduled with fewer resources than provisioned, the question is which number of shares the host scheduler uses to calculate MPS: the shares the VM is using at the current moment, or the nominal number of shares the VM was provisioned with?
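The selection rule described in this comment can be written as a toy Python sketch: compute MHz consumed per share for each VM and pick the lowest. It uses the configured (nominal) shares, which is exactly the assumption the question is asking about. The real ESXi scheduler is considerably more involved, and the VM names, MHz figures and share values here are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class VM:
    name: str
    consumed_mhz: float  # CPU recently consumed (hypothetical figures)
    shares: int          # configured (nominal) CPU shares

    @property
    def mhz_per_share(self) -> float:
        return self.consumed_mhz / self.shares


def pick_next(vms: list[VM]) -> VM:
    """Pick the VM with the lowest MHz-per-share, as described in the comment."""
    return min(vms, key=lambda vm: vm.mhz_per_share)


vms = [VM("SQL", 1000, 2000), VM("Citrix 1", 3000, 4000), VM("Develop", 200, 1000)]
print(pick_next(vms).name)  # Develop (0.2 MHz/share vs 0.5 and 0.75)
```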

Hi, I honestly don’t know :-(