Putting your storage to the test

Doesn’t everybody want to brag about how fast their storage is? How they configured and tweaked for hours to squeeze the last bit of IO out of it? But what does it mean when someone says: “My box can do 1200 IOPS”? Is that read speed or write speed, at what block size, and is it a mix of reads and writes?

Recently I received an Iomega IX4-200D from Chad Sakac ( http://virtualgeek.typepad.com ) (Thank you very much Chad!!!) and of course I wanted to put it to the test and see how many IOPS the IX4 could give me. The biggest question, however, was: “How do I test it thoroughly and in a sensible way?”

Iometer

Searching for proper documentation on this, I stumbled upon the “Open unofficial storage performance thread” on the VMware Communities forum. This thread, originally started by ChristianZ, has been running for quite some time now and is very popular, since many forum members have contributed the performance results of their storage. Christian created a performance test using the tool Iometer, which tests your storage using four different access patterns.

- Max Throughput 100% read. In this test Iometer uses 32KB blocks and performs 100% reads for 2 minutes, 100% sequential.

- Real Life test. In this test Iometer uses 8KB blocks and performs 65% reads (35% writes) for 2 minutes, 40% sequential and thus 60% random.

- Max Throughput 50% Read. In this test Iometer uses 32KB blocks and performs 50% reads (50% writes) for 2 minutes, 100% sequential.

- Random-8K-70%Read. In this test Iometer uses 8KB blocks and performs 70% reads (30% writes) for 2 minutes, 100% random.
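To keep the four patterns straight, it can help to write them down as plain data. The following is only a sketch for reference; the class and field names are my own and are not part of Iometer or of the forum thread's config file.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AccessPattern:
    """One of the four test patterns (names and fields are illustrative)."""
    name: str
    block_kb: int          # transfer size in KB
    read_pct: int          # share of reads; the rest are writes
    random_pct: int        # share of random I/O; the rest is sequential
    duration_s: int = 120  # each pattern runs for 2 minutes


PATTERNS = [
    AccessPattern("Max Throughput 100% read", 32, 100, 0),
    AccessPattern("Real Life test", 8, 65, 60),
    AccessPattern("Max Throughput 50% Read", 32, 50, 0),
    AccessPattern("Random-8K-70%Read", 8, 70, 100),
]


def describe(p: AccessPattern) -> str:
    """Render a pattern as a one-line summary."""
    return (f"{p.name}: {p.block_kb}KB blocks, {p.read_pct}% read / "
            f"{100 - p.read_pct}% write, {p.random_pct}% random, {p.duration_s}s")


for pattern in PATTERNS:
    print(describe(pattern))
```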

Another test I found was at the ReadyNAS website, with an even more extensive version of this test called the “Super ATTO Clone pattern”. This test performs a number of read and write actions that are fully sequential but differ in block size. Starting with reads of 0.5K blocks, the block size doubles each run up to a maximum of 8MB. The test then performs a similar sequence of writes.
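The doubling sequence is easy to enumerate. This sketch only lists the block sizes described above; it does not read or reproduce the actual Iometer config file, and the function names are made up for illustration.

```python
def atto_block_sizes(start_bytes=512, max_bytes=8 * 1024 * 1024):
    """Return block sizes in bytes, doubling from start_bytes up to max_bytes."""
    sizes = []
    size = start_bytes
    while size <= max_bytes:
        sizes.append(size)
        size *= 2
    return sizes


def label(size_bytes):
    """Format a size the way the results table does (0.5K, 1K, ..., 8M)."""
    if size_bytes >= 1024 * 1024:
        return f"{size_bytes // (1024 * 1024)}M"
    if size_bytes >= 1024:
        return f"{size_bytes // 1024}K"
    return f"{size_bytes / 1024:g}K"


SIZES = atto_block_sizes()
print(len(SIZES), "runs per direction:", [label(s) for s in SIZES])
```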

Note to author of the Super ATTO Clone pattern: After downloading the zip file containing the config file, I was unable to find what link I downloaded your test from. Please e-mail me so I can give you credit for your work.

Purpose of the test

It’s always good to know what you want to prove when testing. From my tests I want to learn the following:

- Max performance of the IX4-200D when used for ESX storage

- Performance differences between iSCSI and NFS

Test setup

The Iomega IX4-200D in my lab has 4 disks (ST31000520AS) of 932GB each, on which I created a RAID5 volume, which leaves me 2.7TB of effective storage. On this volume I created a 1.5TB iSCSI volume that was presented to all three ESX hosts in my lab. The disk write cache setting is enabled.
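The 2.7TB figure follows from RAID5 giving up one disk's worth of capacity to parity. A quick check, assuming the 932GB per disk quoted above and 1024GB per TB (the function name is just for this sketch):

```python
def raid5_usable_gb(disk_count, disk_gb):
    """Usable RAID5 capacity: one disk's worth of space goes to parity."""
    if disk_count < 3:
        raise ValueError("RAID5 needs at least 3 disks")
    return (disk_count - 1) * disk_gb


usable_gb = raid5_usable_gb(4, 932)
usable_tb = usable_gb / 1024  # assumes 1TB = 1024GB
print(f"{usable_gb} GB usable, about {usable_tb:.1f} TB")
```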

The ESX hosts are all running vSphere 4U1 (build 208167). When performing a test I made sure that the test VM was the only VM running on that host. The only exception is the test where I run the test VMs on all three hosts at once. In that situation the first ESX host also runs 5 VMs that are needed for my basic environment, but they draw almost no performance at all. I also couldn’t spot differences in results between the VM running on the loaded ESX host and the VMs on the empty ESX hosts.

Each test VM was a clone of the first test VM running Windows 2003 Server, which has 1024MB RAM, 1 vCPU, a thin provisioned boot disk of 10GB connected to the LSI Logic virtual controller and a thin provisioned 50GB disk connected to the Paravirtual SCSI controller. The file system on the disk is NOT aligned; the VMFS volume on the iSCSI target is aligned by vCenter during creation. On the same 2.7TB volume the remaining space is used for an NFS volume, also presented to the ESX hosts. All devices (ESX hosts and IX4-200D) are connected over 1Gbps links through a Linksys 8 port SLM2008 switch.

Test preparation

Since my ESX hosts are white boxes and my 1Gbit switch is a SoHo switch, I wanted to rule out that one of them (or both) would be a limiting factor. I therefore performed a series of tests to prove that the IX4 would give up before my network, ESX host or VM would.

On ESX02 I started VM01 (disks on the iSCSI volume) and performed the first set of 4 tests. Then I started VM02 and VM03 on the same ESX02 and ran the same set of tests. The combined throughput of all 3 VMs was 92% of the throughput of the single VM, with each VM performing at about 1/3 of the total. For the last test I ran all three VMs on separate ESX hosts. The combined throughput was 94% of the max throughput of the single VM, and again each VM performed at about 1/3 of the total. In other words, no matter how I spread the load, I never got better throughput than with the single VM running alone. From this I conclude that my network isn’t the bottleneck, nor are the ESX hosts or the VMs.

Now for the iSCSI results

The moment we’ve all been waiting for. The first test I performed was with VM01 running on a completely free ESX host (no other VMs on that host) and no other activity on the IX4-200D. I ran the four tests I mentioned at the beginning.

| Test | Description | MB/sec | IOPS | Average IO response time (ms) | Maximum IO response time (ms) |
|---|---|---|---|---|---|
| Test 001a | Max Throughput 100% read | 55.058866 | 1761.883723 | 35.021015 | 207.740649 |
| Test 001b | RealLife-60%Rand-65%Read | 0.696917 | 89.205396 | 663.790422 | 11528.93203 |
| Test 001c | Max Throughput-50%Read | 22.040195 | 705.286232 | 83.689648 | 252.396324 |
| Test 001d | Random-8k-70%Read | 0.505056 | 64.647197 | 913.201061 | 12127.4405 |
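The MB/sec and IOPS columns are not independent: throughput is simply IOPS times block size. The sketch below recomputes MB/sec from the IOPS column, assuming the block sizes of the four patterns (32KB and 8KB) and 1MB = 1024KB, and compares it with the reported values. The variable and function names are mine.

```python
# (test, block size in KB, reported IOPS, reported MB/sec) from the table above
RESULTS = [
    ("Test 001a", 32, 1761.883723, 55.058866),
    ("Test 001b", 8, 89.205396, 0.696917),
    ("Test 001c", 32, 705.286232, 22.040195),
    ("Test 001d", 8, 64.647197, 0.505056),
]


def mb_per_sec(iops, block_kb):
    """Throughput in MB/sec for a given IOPS rate and block size (1MB = 1024KB)."""
    return iops * block_kb / 1024


for test, block_kb, iops, reported in RESULTS:
    computed = mb_per_sec(iops, block_kb)
    print(f"{test}: computed {computed:.6f} MB/sec, reported {reported:.6f} MB/sec")
```

This is why a bare IOPS number means little without the block size: 64 IOPS at 8KB is only about half a MB per second.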

The following results are from the “Max Throughput 100% Read” test, performed on three VMs at the same time, each VM running on its own ESX host.

| Test | MB/sec | IOPS | Average IO response time (ms) | Maximum IO response time (ms) |
|---|---|---|---|---|
| Test 007 – VM01 | 17.217302 | 550.95366 | 110.365821 | 295.806031 |
| Test 007 – VM02 | 17.424422 | 557.581504 | 108.932939 | 371.429888 |
| Test 007 – VM03 | 17.313917 | 554.045346 | 110.181721 | 398.97054 |
| Totals | 51.955641 | 1662.58051 | n/a | n/a |

As you can see the total MB/sec and IOPS of the three VMs is at 94% of the performance of the single VM in Test 001a.
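For anyone who wants to redo this comparison with their own numbers, here is a small sketch that sums the per-VM results and expresses them as a fraction of the single-VM run from Test 001a. The data is copied from the tables; the structure and names are just for illustration.

```python
SINGLE_VM = {"mb_s": 55.058866, "iops": 1761.883723}  # Test 001a

THREE_VMS = [  # Test 007, one VM per ESX host
    {"vm": "VM01", "mb_s": 17.217302, "iops": 550.95366},
    {"vm": "VM02", "mb_s": 17.424422, "iops": 557.581504},
    {"vm": "VM03", "mb_s": 17.313917, "iops": 554.045346},
]

total_mb_s = sum(r["mb_s"] for r in THREE_VMS)
total_iops = sum(r["iops"] for r in THREE_VMS)

# Combined result as a fraction of what a single VM achieved alone
mb_ratio = total_mb_s / SINGLE_VM["mb_s"]
iops_ratio = total_iops / SINGLE_VM["iops"]

print(f"Combined: {total_mb_s:.2f} MB/sec ({mb_ratio:.0%} of single VM)")
print(f"Combined: {total_iops:.2f} IOPS ({iops_ratio:.0%} of single VM)")
for r in THREE_VMS:
    print(f"{r['vm']} share of total: {r['mb_s'] / total_mb_s:.1%}")
```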

A more difficult test, one that most storage systems have problems with, is the real life test. To see how much this would impact normal throughput, I ran the “Real Life test” on VM01 and the “Max Throughput 100% read” test on VM02 and VM03, with all VMs again on different ESX hosts.

| Test | Description | MB/sec | IOPS | Average IO response time (ms) | Maximum IO response time (ms) |
|---|---|---|---|---|---|
| Test 008 – VM01 | RealLife-60%Rand-65%Read | 0.638358 | 81.709782 | 696.166923 | 10919.88228 |
| Test 008 – VM02 | Max Throughput-100%Read | 4.115037 | 131.681186 | 460.754803 | 18938.20332 |
| Test 008 – VM03 | Max Throughput-100%Read | 4.518114 | 144.579644 | 417.7675 | 16263.77291 |
| Totals | | 9.271509 | 357.970612 | n/a | n/a |

When comparing the results from Test 008 – VM01, where the VM ran at 0.64 MB/sec and about 82 IOPS, with the results from Test 001b, you can see that we still get about 92% of the performance on this test, even though Test 008 – VM02 and VM03 are running at the same time, demanding around 4 MB/sec and 130 to 145 IOPS each.

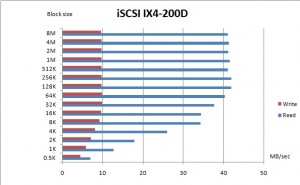

As a last test I ran the “Super ATTO Clone pattern” test, which performs read and write actions with changing block sizes. This test can help you determine the ideal block size for the file system you want to run.

| Block size | Read MB/sec | Write MB/sec |
|---|---|---|
| 0.5K | 6.95 | 4.54 |
| 1K | 12.77 | 5.824 |
| 2K | 17.858 | 7.154 |
| 4K | 25.98 | 8.08 |
| 8K | 34.296 | 9.2468 |
| 16K | 34.41 | 9.652 |
| 32K | 37.686 | 9.828 |
| 64K | 40.271 | 9.84 |
| 128K | 41.862 | 9.712 |
| 256K | 41.918 | 9.689 |
| 512K | 41.011 | 9.725 |
| 1M | 41.443 | 9.713 |
| 2M | 41.093 | 9.719 |
| 4M | 41.241 | 9.703 |
| 8M | 41.006 | 9.687 |

When looking at the graph, you can see that block sizes below 64K are suboptimal. So in theory I could get even better performance in the “Max Throughput 100% read” test if I ran it again using 64K blocks instead of 32K blocks. Well, for this test that might be true, but applying the same logic to the “Real Life test”, which uses 8K blocks, wouldn’t be fair, since there you want to test real life performance and 8K blocks are considered real life behavior (more or less).

The conclusion of this iSCSI test is that I can read at 55MB/sec from the IX4-200D and get around 1761 IOPS in the most ideal situation. Ideal, because you will seldom have only read actions on your storage.
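To put the 55MB/sec in perspective against the 1Gbps links, the sketch below converts it to a raw link utilisation. Two assumptions are baked in: that MB here means 1024 × 1024 bytes (which matches IOPS × 32KB in Test 001a), and that protocol overhead from Ethernet, TCP/IP and iSCSI is ignored, so the real utilisation is somewhat higher.

```python
LINK_BITS_PER_SEC = 1_000_000_000  # 1Gbps link, raw line rate


def link_utilisation(mb_per_sec, link_bps=LINK_BITS_PER_SEC):
    """Fraction of raw link bandwidth used by the payload alone.

    Assumes 1MB = 1024 * 1024 bytes and ignores all protocol overhead.
    """
    payload_bits_per_sec = mb_per_sec * 1024 * 1024 * 8
    return payload_bits_per_sec / link_bps


best_read_mb_s = 55.058866  # Test 001a
utilisation = link_utilisation(best_read_mb_s)
print(f"{best_read_mb_s:.1f} MB/sec is about {utilisation:.0%} of a 1Gbps link")
```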

The second conclusion I want to draw is that 64K is the optimal block size for the IX4-200D as this is the point where it reaches the best throughput when looking at read and write speeds.
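One way to make "the point where it reaches the best throughput" concrete is to look for the smallest block size that gets within some fraction of the best measured value. The 95% threshold below is my own choice for this sketch, not something the test defines; with a different threshold the answer can shift.

```python
# (block size, read MB/sec, write MB/sec) from the Super ATTO Clone results
ATTO = [
    ("0.5K", 6.95, 4.54), ("1K", 12.77, 5.824), ("2K", 17.858, 7.154),
    ("4K", 25.98, 8.08), ("8K", 34.296, 9.2468), ("16K", 34.41, 9.652),
    ("32K", 37.686, 9.828), ("64K", 40.271, 9.84), ("128K", 41.862, 9.712),
    ("256K", 41.918, 9.689), ("512K", 41.011, 9.725), ("1M", 41.443, 9.713),
    ("2M", 41.093, 9.719), ("4M", 41.241, 9.703), ("8M", 41.006, 9.687),
]
READ, WRITE = 1, 2  # column indexes


def smallest_near_peak(results, columns, fraction=0.95):
    """Smallest block size where every given column is within `fraction` of its peak."""
    peaks = {col: max(row[col] for row in results) for col in columns}
    for row in results:
        if all(row[col] >= fraction * peaks[col] for col in columns):
            return row[0]
    return None


print("Read near peak from: ", smallest_near_peak(ATTO, [READ]))
print("Write near peak from:", smallest_near_peak(ATTO, [WRITE]))
print("Both near peak from: ", smallest_near_peak(ATTO, [READ, WRITE]))
```

With this threshold, writes level off earlier than reads, and 64K is the first size where both are close to their peak.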

This concludes my first part. In the next part I will show you the performance of the IX4-200D when using NFS and give you download links to the data I gathered.

Impressive and thorough.

What are the specs of this IX4-200 box ?

Any idea what impact caching might have ?

Curious to see the comparison to NFS.

Wow nice work Gab, I agree with lucd, can't wait to see the NFS comparisons.

Just wanted to make sure you were using MB and Mb intentionally in these tests.

I also have an IX4-200D, glad to see someone tested it, definitely looking forward to the NFS tests.

Excellent job Gabe,

What is great is that you explain the numbers you got from the test to the readers.

The results should not be better when running NFS, but close, I'd say. It would be interesting at the end of your second part (or the third one?) to do a little comparison with some lower-end iSCSI SANs currently used in enterprise storage.

To be honest, I have to double check the Kb/Mb/MB/Mbps usage every time again. I did an update. Thx for bringing this to my attention.

Hello Gab,

I just wanted to know the configuration of your switch (Traffic isolation, Jumbo Frame). Can you please detail your test setup on this ?

Thanks

Good article Gabe,

What block size have u been using for your VMFS volumes? 8MB?

VMFS volume was 4MB block size, but the VMFS block size has no influence on the read action of the VM. If Guest file system in the VMDK has 32KB block size, it will read 32KB blocks.

I've posted some thoughts on my blog since I rambled on a bit http://ewan.to/post/307847803/thoughts-on-iomeg…

Your tests were really interesting thanks for publishing the results. I'm a fan of the IX4-200d but the limitations you've found make me wonder about some use cases for it.

Do you think you could get time to retry the same tests in RAID10 mode as well as NFS? I know it's a hassle, but it seems to me the random workload tests imply something is broken with the IX4-200d in that setup of either iSCSI, RAID5, or something else. That maximum IO response time in test 001d is 12 seconds, isn't it?

I made a typo in the disk type. It's a ST31000520AS (3 zeros instead of 2), which shows it's a 5900 rpm disk. Sorry about that.

Mail me for more details on NFS.

Thanks I'll update my post too, funny that the one you did write returns results from shops selling a drive too :)

ATTO tool at: http://www.attotech.com/software/files/drivers/…

Running same tests with (4) SSD drives in the IX4-200D.. results coming in a few..

I'm not using the ATTO test tool, just the Iometer configuration file.

Let me know how your tests went. Send me an e-mail if you like.

Very interesting post Gabe. Thanks.

Looking forward to the NFS comparison. BTW, I assume this was using a software initiator, not a TOE card?

SSD Results at: http://bit.ly/8pUoZL

I wish I had a better random IO test results, but I'm tired of messing with it for now!

I was just talking to a coworker about why Sun's 1U servers can be shipped with 6 sas drives, but only 4 SSDs. I told him the SSDs probably overwhelm the RAID controller. Perhaps there is some truth. This is an unfortunate result though, in that I really wanted to build one of these things up with SSDs. Somebody needs to try a Drobo now.

Hi Gabe, great post!

Why did you choose NOT to align the file system partition?

I ran ATTO test on a similar system (thecus Raid5 with 4 disks, virtual machine win2008 with aligned file system) obtaining about 35MB/s write and 75MB/s read with 64KB block size.

It could be interesting to see the same tests with aligned file system to see the difference..

Excellent post Gabe. We see more and more entry level NAS'es in HCL for vSphere, Iomega being the first one I guess.

I have also compiled and published a post on my blog regarding benchmarking tools available out there and especially IOmeter. I've created a baseline IOmeer.cfg config file based on a post from Chad.

As well I've tested my NAS device, a QNAP TS-639 and published some posts about it. Just have a read.

More at http://deinoscloud.wordpress.com/2009/12/30/ben…

BTW I found a new tool called Intel NAS Performance Toolkit. Give it a try…

Rgds

Didier

Happy New Year 2010

Thanks a lot for the data. The results are more or less in line with what I have seen in my test environment. A few comments/questions:

1. The ATTO profile led to 37.686 MB/s at 32K read, while test 001a produced 55.06 MB/s. That is a pretty big difference considering both are 100% sequential read. Any idea what the ATTO profile is doing differently?

2. The sequential write performance numbers seem to be really low (at least from the ATTO), did you happen to run a Iometer test with 100% sequential and 100% write at 32K? Would be interesting to see what write performance is like.

3. Since you followed the “open unofficial storage performance thread”, I assume you used the “Test Connection Rate” parameter in your tests? I found the parameter making a big difference because disk close operations have impact on cache flushing and prefetching.

4. The disk write cache setting on 200d didn't make much difference in my testing, the numbers were within 5% variation.

5. Does anyone know the value of parameter MaxXmitDataSegmentLength in VMware iSCSI initiator? The Iomega target has 8K for parameter MaxRecvDataSegmentLength, that's a pretty small receive buffer. I wonder if ESX has to negotiate down during session creation.

6. The 200d has a Marvell 1.2GHz processor, which might be the bottleneck in a random I/O workload. I also tested the Iomega ix4-200r, a rackmount product that runs a Celeron 3.0GHz CPU and also 4 SATA disks, random performance is about 3x there. Do you think CPU is very important in random I/Os?

Please share your NFS data when you have it. I suspect performance should be about the same as iSCSI, but really would like to see data.

I for one appreciate the insight. Thanks for taking the time to write about and share your process.