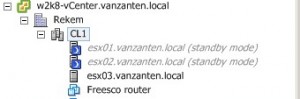

For my home lab I’m always testing different configurations: creating VMs, removing VMs, reinstalling hosts and breaking them on purpose. Sometimes I have 40 VMs running and a week later I’m down to just 5. This week I stumbled upon something strange while cleaning up unused VMs. On my 3-host cluster I had enabled DRS and DPM, and HA was active as well. Only a few VMs were running and, as expected, DRS started freeing up host 1, after which DPM shut host 1 down. Nothing strange there. But a few minutes later DRS freed up host 2 as well, and DPM decided to shut down host 2 too. That was definitely unexpected.

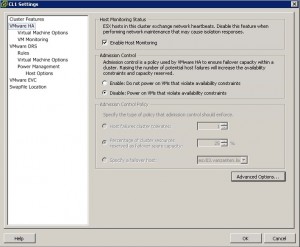

Checking my HA settings, I remembered that for some tests I had set Admission Control to “Disable”. The explanatory text for admission control says: “Admission Control is a policy used by VMware HA to ensure failover capacity within a cluster. Raising the number of potential host failures will increase the availability constraints and capacity reserved.”

When admission control is disabled, HA no longer has to check whether enough VM slots are available on the other hosts. The problem is that it also seems to skip checking whether failover is possible at all. With just one host running, it is impossible to trigger the other hosts to boot if that one host suddenly fails.

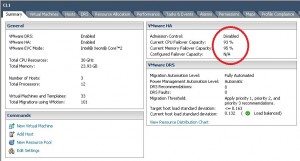

My first guess was that HA was no longer running on that last host, but the screenshot clearly shows that HA is still active at cluster level. Also, when I forced a second host to wake up, HA was not reconfigured on the host that was still running. To me it seems something is going wrong here: in a small environment this can leave you thinking HA protects you against a host failure when in reality it doesn’t.

The bug appears when the following conditions are met:

– In your VMware cluster DRS should be able to move all VMs to one host (without getting an imbalanced load)

– DPM has to be active

– HA has to be active

– and, most importantly, the HA admission control setting has to be disabled.
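To make the interaction between these four conditions concrete, here is a toy Python model. It is a simplification under stated assumptions, not VMware’s actual DRS/DPM algorithm: `ClusterConfig`, `hosts_left_running` and `ha_protection_lost` are hypothetical names, and the model assumes that with HA admission control enabled DPM keeps enough extra hosts powered on to cover the configured number of host failures, while with admission control disabled it only keeps the hosts needed for the current load.

```python
from dataclasses import dataclass


@dataclass
class ClusterConfig:
    # Hypothetical flags mirroring the cluster settings discussed above.
    drs_enabled: bool
    dpm_enabled: bool
    ha_enabled: bool
    admission_control_enabled: bool
    host_failures_tolerated: int = 1


def hosts_left_running(cfg: ClusterConfig, total_hosts: int, hosts_needed_for_load: int) -> int:
    """Toy estimate of how many hosts stay powered on after DPM consolidates."""
    if not (cfg.drs_enabled and cfg.dpm_enabled):
        # Without both DRS and DPM, no hosts are powered off in this model.
        return total_hosts
    needed = max(1, hosts_needed_for_load)
    if cfg.ha_enabled and cfg.admission_control_enabled:
        # Assumption: DPM keeps spare hosts so the HA failover level is not violated.
        needed += cfg.host_failures_tolerated
    return min(total_hosts, needed)


def ha_protection_lost(cfg: ClusterConfig, total_hosts: int, hosts_needed_for_load: int) -> bool:
    """True when HA still shows as enabled but only one host is left running."""
    return cfg.ha_enabled and hosts_left_running(cfg, total_hosts, hosts_needed_for_load) < 2


if __name__ == "__main__":
    # The lab situation: DRS, DPM and HA on, admission control disabled.
    lab = ClusterConfig(drs_enabled=True, dpm_enabled=True, ha_enabled=True,
                        admission_control_enabled=False)
    print(hosts_left_running(lab, total_hosts=3, hosts_needed_for_load=1))  # 1
    print(ha_protection_lost(lab, total_hosts=3, hosts_needed_for_load=1))  # True

    # Same cluster with strict admission control enabled.
    strict = ClusterConfig(drs_enabled=True, dpm_enabled=True, ha_enabled=True,
                           admission_control_enabled=True)
    print(hosts_left_running(strict, total_hosts=3, hosts_needed_for_load=1))  # 2
    print(ha_protection_lost(strict, total_hosts=3, hosts_needed_for_load=1))  # False
```

In this model, only the combination with admission control disabled lets consolidation go all the way down to a single host, while HA still reports itself as enabled.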

This might sound like a very unlikely scenario, but I have a number of customers with just 2 or 3 ESX hosts who disable admission control so they can push a few more VMs onto the hosts.

Edit: Well, this always happens when you don’t need it :-) Last night, before writing this, I checked the VMware KB, but unfortunately it was unavailable. Today Duncan pointed me to the following KB article: “Implications of enabling or disabling VMware HA strict admission control when using DRS and VMware DPM”. It does describe this behavior, though I still think it is wrong not to display any warning.

Whoops, that does look like a bug. Did you report this to the big VMW? What's their reaction?

Check out this KB: http://kb.vmware.com/kb/1007006

It is working as designed. Because admission control is disabled, DRS/DPM does not take violations of admission control into account. I agree that we should add a big comment/exclamation mark when this is the case, though.

Hmm, so this is not a bug per se (see Duncan's KB link), but I do think this is a UI/usability failure. Practically nobody who configures this setup (accepting they might lose some performance in case of a node failure) is going to realize these are the implications. (At least I wouldn't have :), and neither did Gabrie.)
