Zerto (Zero RTO, http://www.Zerto.com/) is a startup company I first heard about when they presented at Tech Field Day in Boston. All information was under embargo, which didn't allow us delegates to publish about Zerto until June 22nd, so I had to hold this post until now. The presentation by Chen Burshan (Director of Product Management) and Gil Levonai (VP Products) got me really excited about the product, and I started playing with the beta. Below is an explanation of what Zerto does and some of my own experiences with it.

Full storage agnostic virtual machine replication

What Zerto promises is full replication of your virtual machines between different sites, independent of the storage brand and even over high-latency WAN connections.

Zerto is an Israel-based company founded by Ziv and Oded Kedem, who also founded Kashya, which was acquired by EMC and is now the foundation of the EMC RecoverPoint product. After selling that company and learning the good and bad of traditional BC/DR solutions, Ziv and Oded used their knowledge to start working on Zerto. The main idea behind Zerto's design is that replication should no longer be done at the storage level but should move up a layer, into the hypervisor. Zerto sets out to prove that you can do this and still keep all the functions of both your storage and your hypervisor. Zerto is meant to be an enterprise solution, meaning it can handle many virtual machines and work across geographically dispersed data centers.

In short, Zerto offers virtual replication that is:

- virtualisation-aware

- software-only

- Tier-1, with RTOs of seconds

- enterprise-class

- purpose-built for virtual environments

How does Zerto replicate

Every ESX/ESXi host running virtual machines that need to be replicated also runs a Virtual Replication Appliance (VRA).

The VRA uses VMware APIs that let it see all data passing through the IO stack, which it then replicates to the secondary site. It only observes the data in the IO stack and does not interfere with the writes to disk. Should the VRA fail, ESX/ESXi continues to operate normally and VMs will not notice any delays.
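To make the "does not interfere" property concrete, here is a minimal sketch of a write splitter, assuming a bounded in-memory queue between the VM's IO path and the VRA. Zerto has not published how its interception works; `Disk`, `split_write` and the queue size are hypothetical illustrations, not Zerto or VMware APIs.

```python
import queue


class Disk:
    """Hypothetical stand-in for a VM's virtual disk: block number -> data."""

    def __init__(self):
        self.blocks = {}

    def write(self, block, data):
        self.blocks[block] = data


def split_write(disk, replication_queue, block, data):
    """Complete the VM's write locally, then offer a copy for replication.

    The local write never waits on the replication side: if the queue is
    full (the appliance is down or falling behind), the copy is not queued
    and the block number is returned so the caller can track it as changed.
    """
    disk.write(block, data)  # the VM's write completes regardless
    try:
        replication_queue.put_nowait((block, data))
    except queue.Full:
        return block
    return None


# Usage: a queue with room for two copies; the third write still succeeds.
disk = Disk()
to_vra = queue.Queue(maxsize=2)
needs_resync = [b for b in (1, 2, 3) if split_write(disk, to_vra, b, b"data") is not None]
```

All three writes reach the disk; only the copy that did not fit in the queue has to be tracked for later replication.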

The VRA replicates the data to the VRA on an ESX/ESXi host at the target site, which can be local or remote. Before sending, the data is compressed using built-in WAN compression and throttling techniques, and WAN disconnects or degradation are handled automatically.
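Zerto doesn't document its WAN compression or throttling algorithms. As a generic illustration only, the sketch below compresses each batch of replicated writes with `zlib` and paces transmission with a token bucket; the batching, compression level and rates are assumptions.

```python
import time
import zlib


class TokenBucket:
    """Token-bucket rate limiter measured in bytes per second."""

    def __init__(self, rate_bytes_per_s, capacity_bytes, clock=time.monotonic):
        self.rate = rate_bytes_per_s
        self.capacity = capacity_bytes
        self.tokens = capacity_bytes
        self.clock = clock
        self.last = clock()

    def wait_time(self, nbytes):
        """Consume tokens and return 0.0 if nbytes may go now, else seconds to wait."""
        now = self.clock()
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if nbytes <= self.tokens:
            self.tokens -= nbytes
            return 0.0
        return (nbytes - self.tokens) / self.rate


def send_compressed(batch, bucket, send, sleep=time.sleep):
    """Compress a batch of replicated writes and send it within the rate limit."""
    frame = zlib.compress(batch, level=6)
    if len(frame) > bucket.capacity:
        raise ValueError("compressed batch exceeds bucket capacity; use smaller batches")
    while (delay := bucket.wait_time(len(frame))) > 0:
        sleep(delay)
    send(frame)
    return len(frame)
```

The receiving side would reverse this with `zlib.decompress`. The token bucket allows short bursts up to `capacity_bytes` while holding the long-term average to `rate_bytes_per_s`.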

When replicating over a WAN, the first sync between source and target can take quite some time if the source site holds a few terabytes. To save time, you can first restore from your regular backup at the target site and then have Zerto perform a sync, so only the differences have to cross the WAN.
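The saving comes from comparing rather than copying. Here is a minimal sketch of that idea, assuming both sides hash fixed-size blocks and only the digests cross the WAN. Zerto's actual sync mechanism wasn't described in the presentation, and the 4 KB block size is an assumption.

```python
import hashlib

BLOCK_SIZE = 4096  # assumed block size for the comparison


def block_digests(image: bytes, block_size: int = BLOCK_SIZE):
    """SHA-256 digest of each fixed-size block of a disk image."""
    return [
        hashlib.sha256(image[i:i + block_size]).digest()
        for i in range(0, len(image), block_size)
    ]


def blocks_to_send(source: bytes, seeded_target: bytes, block_size: int = BLOCK_SIZE):
    """Indices of source blocks that differ from, or are missing on, the seeded target."""
    src = block_digests(source, block_size)
    dst = block_digests(seeded_target, block_size)
    return [i for i, d in enumerate(src) if i >= len(dst) or dst[i] != d]
```

If the restored backup is only a day old, most digests match and only the blocks changed since the backup are sent in full.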

Journaling

Source virtual machines can have thin or thick VMDKs or a physical or virtual RDM, and at the target site the data can be placed differently from the source. For example, a database server could have its VMDKs on different datastores at the source site but grouped on one datastore at the target site. At the target site, all received data is written to the target disks and a journal is kept in a VMDK.

In case of a disaster at the source site, you can of course switch to the current state of the target site; otherwise it wouldn't be a disaster recovery product. However, the journal also makes it possible to go back in time and restore the state of one or two hours ago, or longer if you have the disk capacity for it. In many cases real DR is needed not because of a natural disaster but because of logical errors, so it is ideal to be able to go back further than just the latest state.
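The ability to go back in time follows from what the journal stores. Here is a minimal sketch, assuming the journal records the previous contents of every block it overwrites (an undo record). Zerto's on-disk journal format is not public, and `JournaledReplica` is a hypothetical name.

```python
from dataclasses import dataclass, field


@dataclass
class JournaledReplica:
    """Replica disk plus a time-ordered journal of the writes applied to it.

    Assumption for illustration: each journal entry keeps the block's
    previous contents, so the replica can be rolled back.
    """
    blocks: dict = field(default_factory=dict)
    journal: list = field(default_factory=list)  # (timestamp, block, old_data)

    def apply(self, ts, block, data):
        self.journal.append((ts, block, self.blocks.get(block)))
        self.blocks[block] = data

    def image_at(self, ts):
        """Reconstruct the disk as it was at time ts (writes at or before ts kept)."""
        image = dict(self.blocks)
        for t, block, old in reversed(self.journal):
            if t <= ts:
                break
            if old is None:
                image.pop(block, None)  # block did not exist yet at time ts
            else:
                image[block] = old
        return image
```

Recovering from a logical error then means calling `image_at(t)` with a `t` just before the bad write. How far back you can go is limited by how many journal entries you can afford to keep.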

The journal holds all data changes and takes up extra disk space, which you should plan for when deciding how far back in time you want to be able to go. An IO-intensive application generates a lot of changes and therefore uses considerably more journal space.
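A back-of-the-envelope way to plan that space is to multiply the application's sustained change rate by the history window you want. The helper below is a sketch under that assumption. It ignores compression, peaks and bursts, and the example rate is illustrative, not a measurement.

```python
def journal_size_gb(change_rate_mb_per_s: float, history_hours: float,
                    overhead: float = 1.0) -> float:
    """Rough journal size in GB: sustained change rate x history window.

    overhead is an assumed multiplier for metadata or compression effects;
    1.0 means raw changed data only.
    """
    seconds = history_hours * 3600
    return change_rate_mb_per_s * seconds * overhead / 1024
```

For example, `journal_size_gb(2, 4)` gives 28.125 GB for an application writing 2 MB/s of changes with four hours of history. Doubling either the rate or the window doubles the journal.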

The journal also lets you test-run a virtual machine at the target site while replication keeps running. While the replicated VM is started for testing, replication data coming from the source site is still written to the target VMDK. Not only can the replicated VM be tested for correct failover, it can also be used to create a small test environment for other purposes.
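The post doesn't say how Zerto keeps a test VM's own writes apart from the incoming replication stream. One common way to get that behaviour is a copy-on-write scratch layer. The sketch below assumes that approach, and `ScratchOverlayView` is a hypothetical name.

```python
class ScratchOverlayView:
    """Disk view for a test VM: frozen replica image plus a private scratch layer.

    Assumed design: the test VM reads from a copy of the replica taken at a
    chosen point in time, and its own writes land in `scratch`, so the live
    replica keeps receiving replicated writes untouched. A real system would
    use a snapshot rather than a full copy.
    """

    def __init__(self, replica_image):
        self.base = dict(replica_image)  # copy: later replication does not leak in
        self.scratch = {}

    def read(self, block):
        return self.scratch.get(block, self.base.get(block))

    def write(self, block, data):
        self.scratch[block] = data  # never touches the replica
```

When the test is finished, throwing away `scratch` discards everything the test VM did, and the replica is exactly as replication left it.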

When replication fails

As explained above, at the source site every write that goes through the virtual machine's SCSI stack is split (copied) into the memory of the VRA and then replicated to the target. When the link between source and target fails, however, the VRA starts using a bitmapping technique to remember all changes, so it can resume replication as soon as the link is up again.

To prevent the VRA from running out of memory, the bitmap stores less detail the longer the link is down. This is what happens:

- Under normal operation the VRA records changes in memory at the smallest block size. If blocks 2, 5, 9, 13, 17 and 20 have changed, this is recorded in memory and removed again as soon as those blocks have been replicated.

- When the link fails and the number of changes to remember keeps growing, the VRA decides to store less detail. In our example, it could decide to only remember that something changed in blocks 2 to 9 and 13 to 20.

- If the link stays down even longer, the VRA might go further and only remember that blocks 2 through 20 have changed.

Storing less and less detail during a long outage means more data has to be replicated once the link is back. In the example above, the 6 changed blocks grow to 16 blocks to resend at the second stage and 19 at the third.
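Zerto did not disclose how its bitmap works internally. The sketch below assumes a simple scheme in which the tracking granularity doubles whenever the set of dirty chunks exceeds a fixed memory budget. It won't reproduce the exact groupings in the example above, but it shows the same trade-off between memory and resend volume. All names are hypothetical.

```python
class CoarseningChangeTracker:
    """Track changed blocks in bounded memory by coarsening granularity.

    Hypothetical scheme: dirty state is a set of chunk indices, where a
    chunk covers `granularity` consecutive blocks. When the set grows past
    `max_entries`, the chunk size doubles and neighbouring chunks merge.
    """

    def __init__(self, max_entries):
        if max_entries < 1:
            raise ValueError("max_entries must be at least 1")
        self.max_entries = max_entries
        self.granularity = 1  # blocks per chunk
        self.dirty = set()    # indices of chunks containing a change

    def mark(self, block):
        self.dirty.add(block // self.granularity)
        while len(self.dirty) > self.max_entries:
            self.granularity *= 2
            self.dirty = {chunk // 2 for chunk in self.dirty}

    def ranges(self):
        """Inclusive (first_block, last_block) ranges to resend, merged when adjacent."""
        out = []
        for chunk in sorted(self.dirty):
            start = chunk * self.granularity
            end = start + self.granularity - 1
            if out and out[-1][1] + 1 == start:
                out[-1] = (out[-1][0], end)
            else:
                out.append((start, end))
        return out

    def blocks_to_resend(self):
        return sum(end - start + 1 for start, end in self.ranges())

    def reset(self):
        """Call once the backlog has been replicated."""
        self.granularity = 1
        self.dirty.clear()
```

Marking blocks 2, 5, 9, 13, 17 and 20 with room for ten entries tracks them exactly (6 blocks to resend). With room for only four entries, the tracker coarsens to 8-block chunks and ends up resending blocks 0 to 23 (24 blocks).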

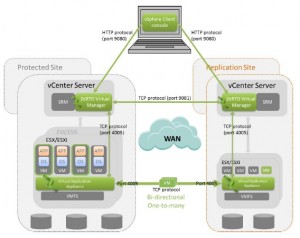

Zerto Virtual Replication architecture

The architecture of the Zerto Virtual Replication environment is actually rather simple. At the bottom is the Virtual Replication Appliance (VRA), a Debian-based virtual machine that sees all SCSI writes by the VM and splits each write into its memory. It then replicates the blocks to the VRA at the replication site using TCP port 4005. It also talks to the Zerto Virtual Manager over TCP port 4005 to keep it updated on everything the VRA is doing.

The Zerto Virtual Manager is a small piece of Windows software that can be installed on the vCenter server or on a separate server, as long as it can talk to vCenter. It monitors replication and manages site links, protection groups, protected VMs, etc. It communicates with the Zerto Virtual Manager at the target site over TCP port 9081.

The last component is the vSphere (Virtual Infrastructure) Client running the Zerto plugin, which lets you manage both the protected and the replication site from the same interface. It runs over HTTP port 9080.
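Because the components talk over a handful of fixed ports, a firewall between the sites is the first thing to check when pairing fails. Below is a small reachability check that uses only Python's standard `socket` module. The port numbers are the ones quoted in this post for the beta and may differ in shipping versions. The hostnames you pass in are your own.

```python
import socket

# Ports as quoted in this post for the Zerto beta; check current documentation.
ZERTO_PORTS = {
    "VRA to VRA (replication)": 4005,
    "VRA to Zerto Virtual Manager": 4005,
    "ZVM to ZVM (between sites)": 9081,
    "vSphere Client plugin (HTTP)": 9080,
}


def port_open(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable, or timed out
        return False


def report(host):
    """Check every listed port on one host; returns {description: reachable}."""
    return {name: port_open(host, port) for name, port in ZERTO_PORTS.items()}
```

Run `report()` from the protected site against the replication site's Zerto Virtual Manager or VRA address. Any `False` entry points at a firewall or routing problem to fix before pairing.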

Installation and protecting VMs in a few simple steps

Installing the Zerto environment was actually very easy. I will walk you through it with a number of screenshots; just be aware that this isn't a full step-by-step walkthrough.

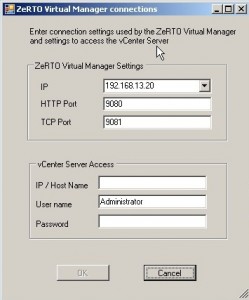

First you install the Zerto Virtual Manager on a Windows server. This is a very simple Next, Next, Finish process. The only things you have to enter are the IP address of your vCenter and the user account used to access vCenter.

After installation is complete, you run the same install at the replication site. The next step is to pair both sites. You can also choose to install the VRAs first, but I went for pairing the sites. For this, go into the vSphere VI Client and click the Zerto tab at cluster or datacenter level. This tab shows a fancy interface with very clear instructions: either click the big “Install VRAs” button or click the “Pair…” button to pair your protection and replication sites. Although the interface looks good, I'm not always happy with it. It requires Adobe Flash and doesn't always respond properly when you click a button. I want to see the button change when I click it, so I don't end up clicking it multiple times.

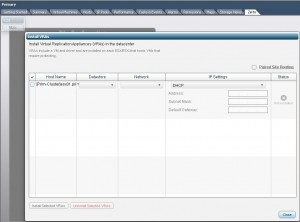

Pairing the sites is very easy: enter the IP address of the Zerto Virtual Manager at the replication site and click PAIR. That's it. Next we install the VRA, the virtual machine that captures all writes and does the actual replication. The same screen where you paired the sites also has the option to install the VRA. Press the button and the next screen asks where to install the VRA, showing a list of ESX/ESXi hosts, datastores and networks to choose from. The VRA can use a DHCP address or a fixed IP. After selecting the desired settings, push the “Install selected VRAs” button and wait. The vCenter task list shows the progress of the installation.

Installation progress:

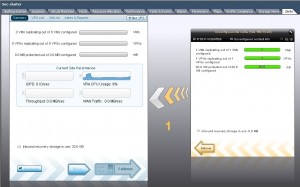

After this the Zerto framework is ready; all we need now are VMs to replicate. Replication is based on Virtual Protection Groups (VPGs). Each protection group contains one or more VMs that should share the same replication and recovery settings. To create a VPG, click the “New VPG” button, add a VM and walk through the recovery settings: RPO Threshold, Maintain history, Max Journal size, which host or cluster to replicate to, which datastores and networks to use, etc.
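To summarise what a VPG bundles together, here is a hypothetical data model of the settings the wizard asks for, plus one plausible use of the RPO threshold: flagging when replication lag exceeds it. The class and field names are illustrative only, not Zerto's API, and treating the threshold as a lag alert is an assumption.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class ProtectionGroup:
    """Hypothetical model of a Virtual Protection Group's settings.

    Field names mirror the options shown in the wizard; they are not
    Zerto's API.
    """
    name: str
    vms: list
    rpo_threshold: timedelta
    history: timedelta
    max_journal_gb: float
    target_cluster: str
    target_datastore: str
    target_network: str

    def rpo_breached(self, last_replicated: datetime, now: datetime) -> bool:
        """True if the replication lag exceeds the configured RPO threshold."""
        return now - last_replicated > self.rpo_threshold
```

Grouping VMs this way means a multi-tier application (for example a database server and its application server) shares one set of recovery settings and can be recovered to the same point in time.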

Once this is done, replication should start, and you can follow it in the Zerto tab at cluster level or, for a specific VM, at VM level.

Summary

As you can see, this is a very easy way to set up replication between two sites, and the fact that it doesn't matter what storage you use will appeal not only to many SMB customers but also to large enterprises. Playing with the Zerto beta has shown me that this is a very nice product, even though I couldn't really stress-test it in my lab environment. I will surely do more testing in a larger environment.

Some last notes I wrote down during the Tech Field Day event but haven't mentioned yet in this post:

- Full support for vMotion, HA and vApps

- Group Policy and configuration

- VSS support

- HA boot orders, vApps and host affinity rules are replicated as well

- Version 1 will be VMware only. Other hypervisors are on the roadmap (months, max 1 year), though it's not clear yet which ones. They will be able to replicate between DIFFERENT hypervisors!

- Protect both sites: symmetrical replication. You can replicate in both directions at the same time, within the same LUN.

- Multi-tenancy is being worked on.

Be aware that the Tech Field Day event is fully sponsored by the companies we visit, including flight and hotel, but we are in no way obligated to write about the sponsors.

How is this different from Veeam?

That is a completely different technique. Veeam replication periodically makes a snapshot of a VM, replicates the delta and then releases the snapshot. Zerto does continuous replication without making snapshots. If you used Veeam replication to replicate, say, every 5 minutes, you would constantly be taking and removing snapshots.

Why not SRM?

With VMware SRM you need identical storage on both sites because the storage does the replication. So replicating from NetApp at the primary site to EMC at the secondary site is almost impossible (not SRM's fault). With Zerto you can replicate independently of the storage because it works one layer above it.

The vSCSI APIs are not supported by VMW. They are not fully developed; they are meant for internal VMW consumption only. How does Zerto plan to address these issues? How do they plan to package, ship, patch and dynamically load their splitter when VMW doesn't have the infrastructure to make that happen at the vSCSI layer?

Sounds like all the noise Zerto is making is based on something that is running in the labs only. How do they plan to really ship this in a clean way so that VMW support it?

Any idea on what pricing will be?

Are there any startup dependencies and/or priorities which can be defined (like SRM has)?

I am not sure what you refer to by “vSCSI APIs”.

The Zerto system interacts with vCenter through standard APIs, primarily the vSphere API ( http://www.vmware.com/support/developer/vc-sdk/visdk400pubs/ReferenceGuide/ ).

The Zerto architecture does not require a so-called “splitter” entity (I am assuming that by “splitter” you mean something which forwards IOs to other hosts or appliances).

Zerto has a VRA (Virtual Replication Appliance) that runs on each physical host, eliminating the need to forward any IOs to other hosts.

Feel free to contact us at info@zerto.com for additional information.

The article says in the beginning that you see data at the vSCSI layer. How is that possible unless you are running a filter driver at the vSCSI layer? And those filters are not supported by VMware.

Your appliance needs to see the IOs and needs to know the identity of the VM, VMDK, offset and length of the writes. Again, if you are not running at vSCSI layer, there is no other way you can obtain this info from ESX.

I think it would help if you clarify this. I know many other people who understand ESX well are asking the same question. I think you can create further awareness by answering this. And the link you have provided results in Page Not Found error.

The correct link is: http://www.vmware.com/support/developer/vc-sdk/visdk400pubs/ReferenceGuide/ There was a “).” behind it, which is why it couldn't resolve to the correct page.

Is that the same API as the VMsafe API? Is it compatible with embedded anti-virus agents?

No it is different from the VMsafe API (now called vShield Edge if I’m correct). It doesn’t have to be compatible with the anti-virus agents because it operates on a deeper level.

Could you use it with local server cache? I.e. like a Fusion-IO card. It might be a good way to implement a server resident write-through cache.

Also, with regard to vShield Edge (I think VMsafe is a feature and vShield is the product): if you are using that to intercept I/O to run an in-place virus scan and Zerto operates at a deeper level, might it replicate and back up a virus?

With SRM 5 you actually don’t need the same storage at both locations (although the scale of SRM in this format is limited) and SRM 5 doesn’t integrate into vCloud Director. On the other hand Zerto integrates into vCloud Director and scales to protect a larger workload.

I am very curious here, as I am following what VMWDeveloper is asking. I have read a bunch on Zerto and see how specific language is used around “embedded in the Hypervisor” or “vSCSI” layer, which I think is completely misleading. I don't think anyone answered VMWDeveloper's questions.

1. Are you running a filter driver at the vSCSI Layer?

2. If so, how is this officially supported?

3. Are you selling something that doesn’t have official VMware Support?

4. Reading through some other papers, you use a lot of terminology that is specific to RecoverPoint. Is this the same product with just a different interface/UI?

It’s interesting you bring up RecoverPoint. EMC has just sued them — EMC thinks Zerto folks stole RecoverPoint IP. http://news.priorsmart.com/emc-v-zerto-l6r6/

Now I am beginning to wonder if they somehow got hold of ESX source code through un-approved ways and figured out a way to squeeze in a filter at the unsupported vSCSI layer of ESX.

The whole story sounds like a can of worms.

Why not just go for a storage hypervisor like DataCore SANsymphony, which has a solid history and can handle dissimilar storage and replication?